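Deploying a Ceph cluster with cephadm

These are my notes on quickly deploying a Ceph cluster with cephadm.

Compared with ceph-deploy or an Ansible-based deployment, cephadm is simpler and more convenient.

Choosing a release: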

https://docs.ceph.com/en/latest/releases/#active-releases

This walkthrough uses 18.2.4 (Reef).

Environment

Three machines running Ubuntu 20.04 are used for this deployment. Their specifications are:

| hostname | CPU (cores) | memory | disks | IP |
|---|---|---|---|---|
| ceph-node1 | 2 | 4G | 20G, 100G | 192.168.122.50 |
| ceph-node2 | 2 | 4G | 20G, 100G | 192.168.122.51 |
| ceph-node3 | 2 | 4G | 20G, 100G | 192.168.122.52 |

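Note that this setup is for testing only. A production Ceph cluster should be planned and sized according to actual requirements.

Set the hostname (run each command on its respective node):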

hostnamectl set-hostname ceph-node1

hostnamectl set-hostname ceph-node2

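hostnamectl set-hostname ceph-node3

Add the following entries to /etc/hosts on every node:

192.168.122.50 ceph-node1

192.168.122.51 ceph-node2

192.168.122.52 ceph-node3

Configure a package mirror inside mainland China by editing /etc/apt/sources.list:

# Source-package mirrors are commented out by default to speed up apt update; uncomment them if needed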

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse

# Pre-release software source; enabling it is not recommended

# deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse

# # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse

After editing the file, refresh the package cache:

apt-get update

Time synchronization

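Configure NTP on every node so that the clocks stay in sync. On Ubuntu the chrony package installs its systemd unit as chrony:

apt-get install chrony -y

systemctl enable chrony --now

Passwordless SSH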

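ceph-node1 acts as the main admin node, so set up one-way passwordless SSH from it to every node. Generate a key pair, then copy the public key to each node:

ssh-keygen

ssh-copy-id root@ceph-node1

ssh-copy-id root@ceph-node2

ssh-copy-id root@ceph-node3

Deploying Docker

Docker can be installed on every node directly with the official script:

wget -qO- https://get.docker.com/ | sh

Deploying Ceph

Downloading cephadm

Download the cephadm binary: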

CEPH_RELEASE=18.2.4

curl --silent --remote-name --location https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-${CEPH_RELEASE}/el9/noarch/cephadm

mv cephadm /usr/sbin/

chmod +x /usr/sbin/cephadmceph集群部署

初始化配置:

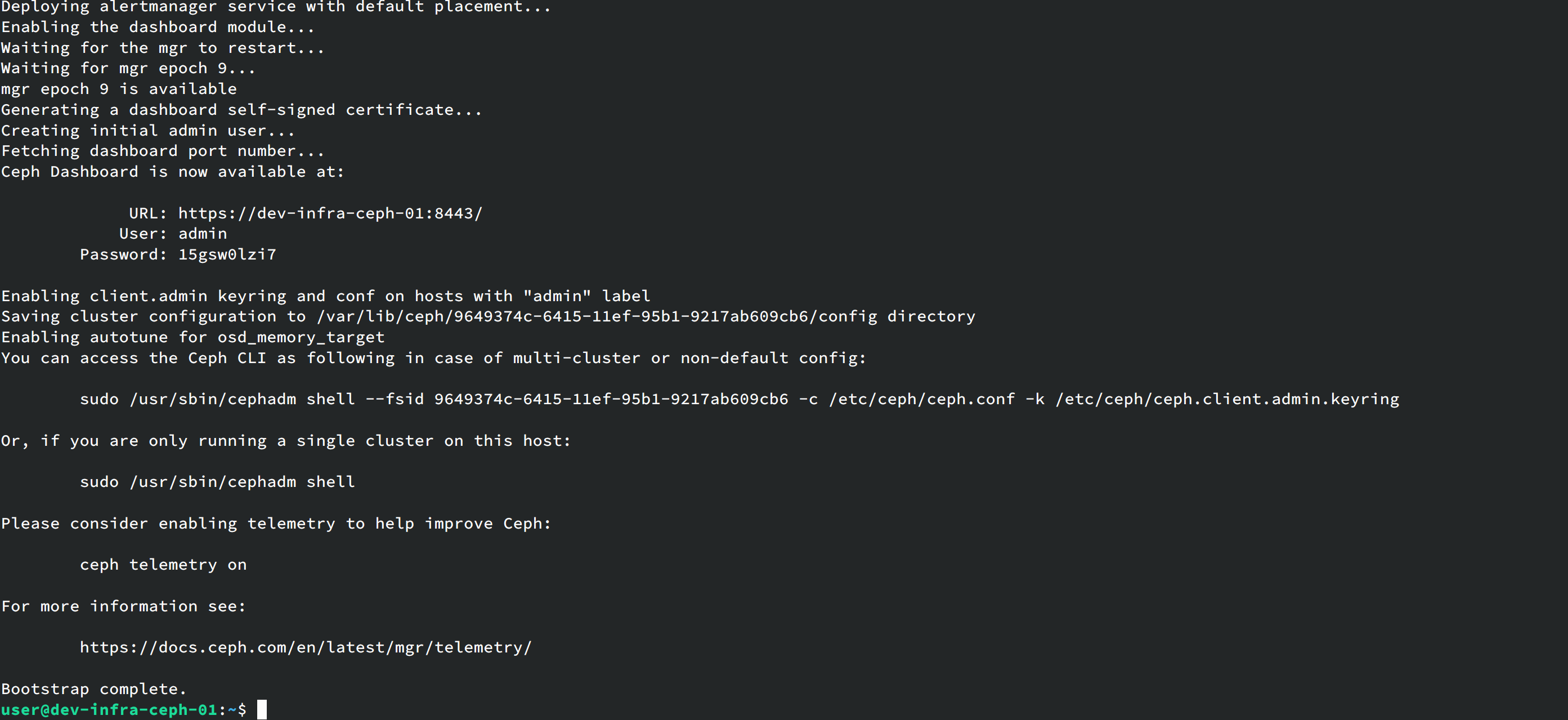

/usr/sbin/cephadm --docker bootstrap --mon-ip 192.168.122.50 --ssh-private-key /root/.ssh/id_rsa --ssh-public-key /root/.ssh/id_rsa.pub部署完成之后:

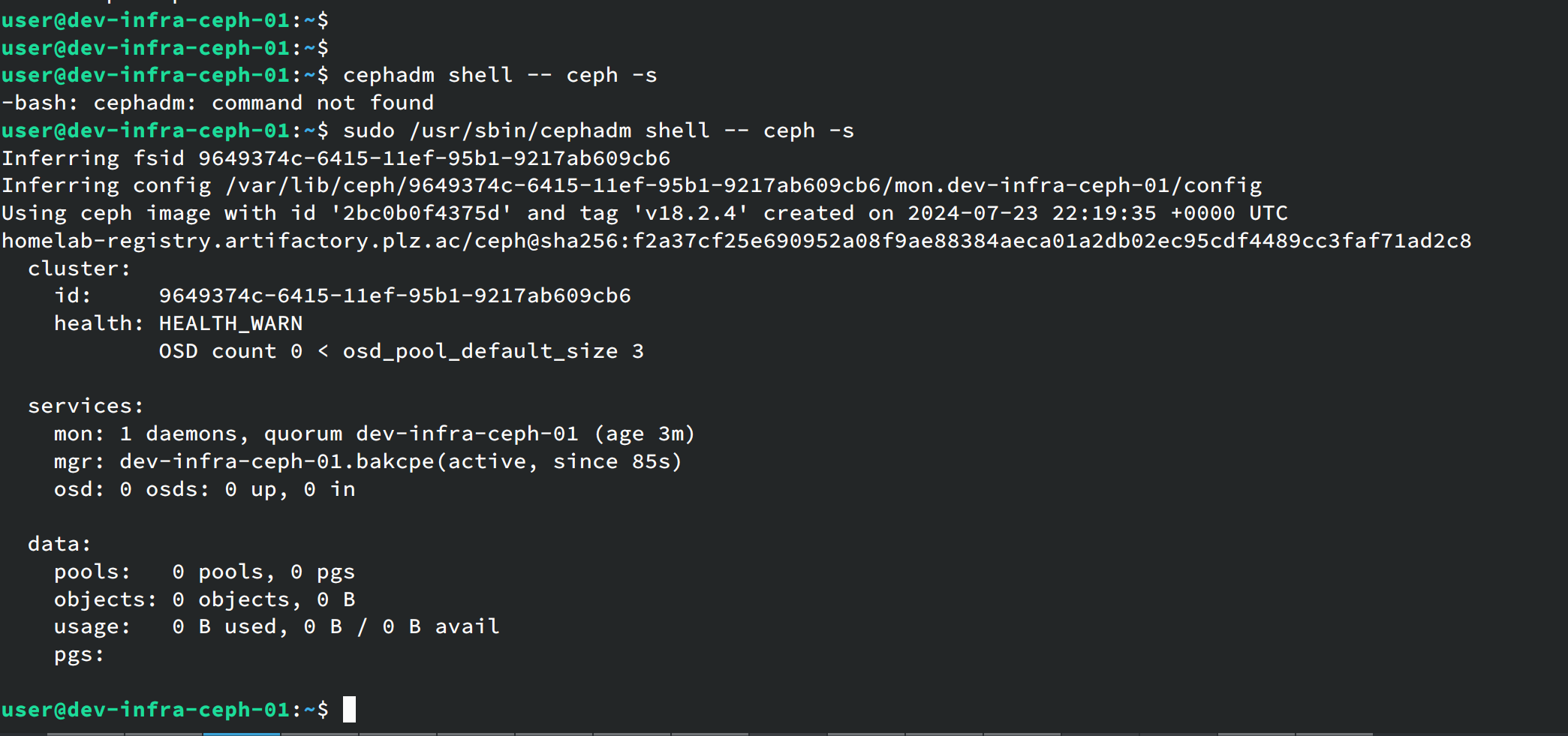

目前ceph的集群还是不可用的 可以通过这条命令来查看集群状态:

cephadm shell -- ceph -s
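Because the cluster only becomes healthy after later steps, it can be handy to poll the health status rather than rerun the command by hand. The following is a minimal sketch, not part of cephadm: the helper name `wait_for_health` and its arguments are hypothetical, and it assumes the wrapped command prints a line starting with `HEALTH_OK` when the cluster is healthy, as `ceph health` does.

```shell
# Poll a health command until it reports HEALTH_OK or the retries run out.
# Usage: wait_for_health <retries> <delay_seconds> <command...>
wait_for_health() {
  retries=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$retries" ]; do
    # Run the supplied command; a failing command counts as "no answer".
    status=$("$@" 2>/dev/null) || true
    case $status in
      HEALTH_OK*)
        echo "healthy after $i check(s)"
        return 0
        ;;
    esac
    echo "check $i: ${status:-no answer}" >&2
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

A possible invocation is `wait_for_health 30 10 cephadm shell -- ceph health`, which checks every 10 seconds for up to 30 attempts.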

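For easier management, install the Ceph client packages on the host:

cephadm add-repo --release reef

cephadm install ceph-common

Once installed, the Ceph version can be checked with:

ceph -v

Configuring the Ceph cluster

List the current hosts:

sudo ceph orch host ls

Only one machine was specified during bootstrap, so the other two nodes have to be added now: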

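sudo ceph orch host add ceph-node2 192.168.122.51

sudo ceph orch host add ceph-node3 192.168.122.52

Label the nodes. The _admin label makes cephadm distribute ceph.conf and the admin keyring to them:

ceph orch host label add ceph-node2 _admin

ceph orch host label add ceph-node3 _admin

Creating OSDs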

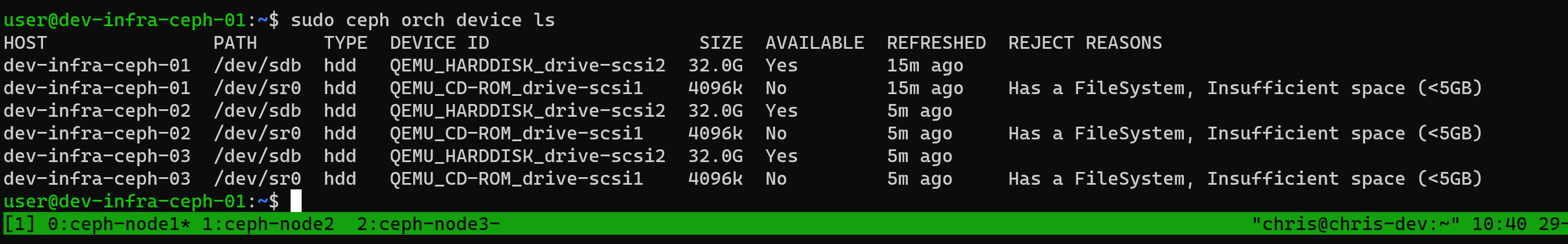

List the disk devices on the nodes:

ceph orch device ls

这里可以直接看到是哪些设备可以用,哪些设备不能用的

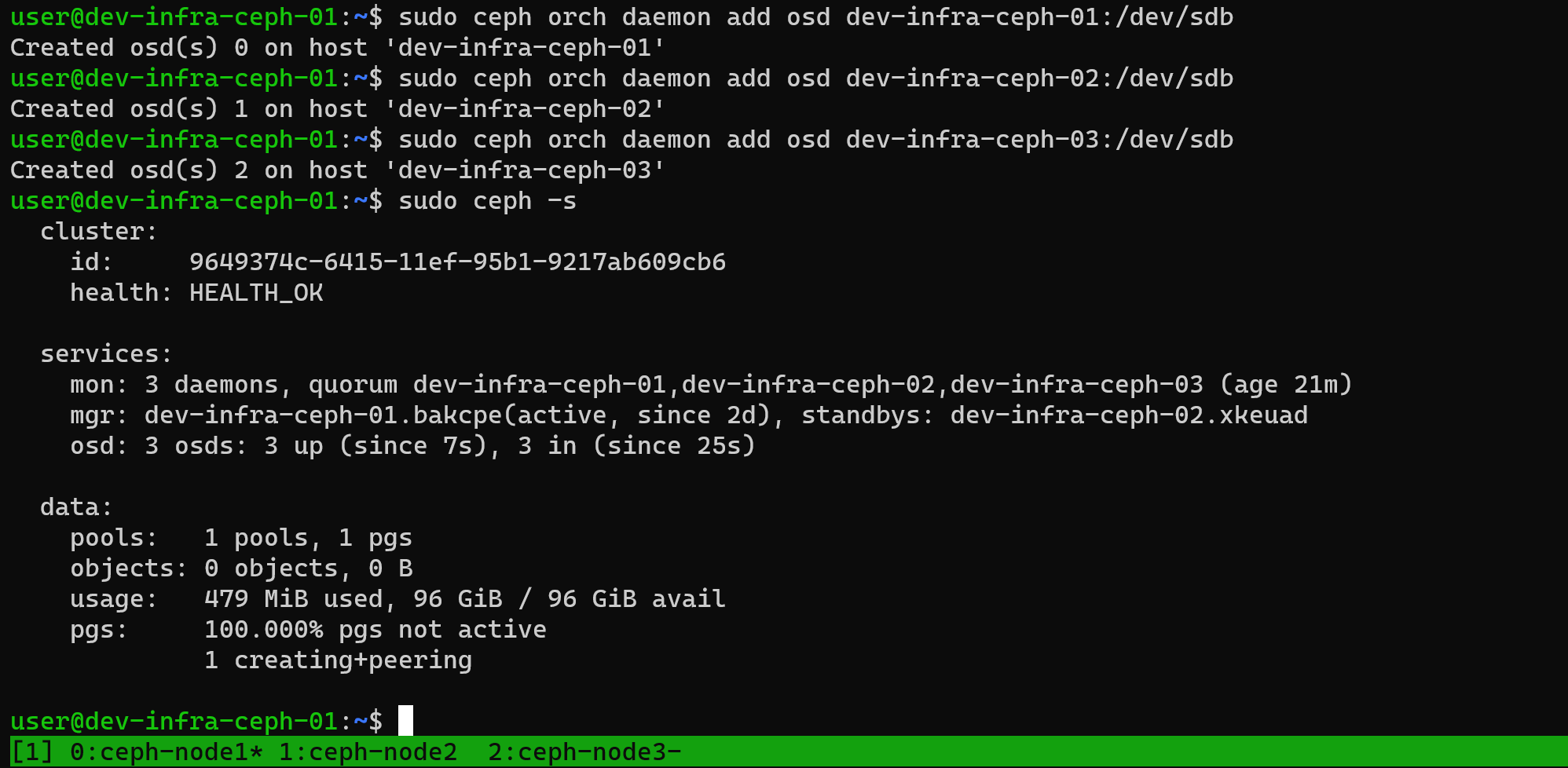

添加磁盘:

ceph orch daemon add osd ceph-node1:/dev/vdb

ceph orch daemon add osd ceph-node2:/dev/vdb

ceph orch daemon add osd ceph-node3:/dev/vdb

Once the OSDs have been added, check the Ceph status again. The cluster should now report a healthy state.
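Since every node in this setup uses the same device name, the add commands can also be generated in a loop. This is a small sketch under that assumption; the helper name `osd_add_commands` is hypothetical, and it only prints the commands so they can be reviewed before anything is run.

```shell
# Print (do not run) one "ceph orch daemon add osd" command per host.
# Usage: osd_add_commands <device> <host>...
osd_add_commands() {
  device=$1
  shift
  for host in "$@"; do
    echo "ceph orch daemon add osd ${host}:${device}"
  done
}

# Hosts and device taken from the environment table in this article.
osd_add_commands /dev/vdb ceph-node1 ceph-node2 ceph-node3
```

Piping the output to `sh` would execute the commands. As an alternative, `ceph orch apply osd --all-available-devices` asks cephadm to create OSDs on every device it reports as available, which is convenient on a test cluster but gives less control over which disks are consumed.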

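Integrating with PVE

RBD storage is used as the example here.

Create a pool:

ceph osd pool create pve

Initialize it:

rbd pool init pve

Create the credentials:

ceph auth get-or-create client.pve mon 'profile rbd' osd 'profile rbd pool=pve'

Save the printed output; it is needed later when adding the storage to PVE.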

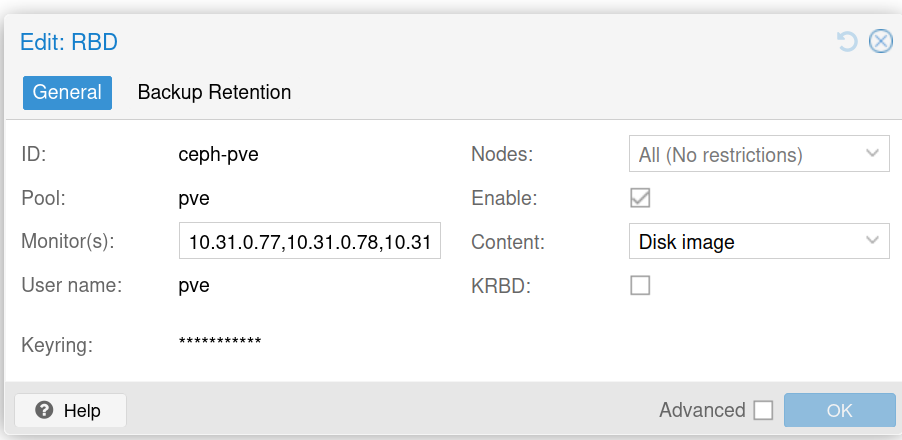

On the PVE cluster, add an RBD storage that points at this pool.

For the keyring field, paste the credential created above.
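The same storage can probably be added from the PVE command line instead of the web UI. The sketch below only prints a `pvesm add rbd` command for review. The storage ID `ceph-rbd`, the keyring path, and the helper name `pve_rbd_command` are assumptions for illustration, and the option names are the ones I recall from the Proxmox storage documentation, so check them with `pvesm help add` on your PVE version before running anything.

```shell
# Print the pvesm command that registers an external RBD pool in Proxmox VE.
# Usage: pve_rbd_command <storage_id> <pool> <user> <keyring_path> <mon_ip>...
pve_rbd_command() {
  storage_id=$1 pool=$2 user=$3 keyring=$4
  shift 4
  # "$*" joins the remaining arguments (the monitor IPs) with spaces.
  echo "pvesm add rbd ${storage_id} --monhost \"$*\" --pool ${pool} --username ${user} --content images --keyring ${keyring}"
}

# Monitor addresses taken from the environment table in this article.
pve_rbd_command ceph-rbd pve pve /root/ceph.client.pve.keyring \
  192.168.122.50 192.168.122.51 192.168.122.52
```

The keyring file itself can be exported on the Ceph side with `ceph auth get client.pve -o ceph.client.pve.keyring` and then copied to the PVE node.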

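Integrating with Kubernetes

Create a pool:

ceph osd pool create kubernetes

Initialize it:

rbd pool init kubernetes

Create the credentials:

ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes' mgr 'profile rbd pool=kubernetes'

Get the monitor information (the output also contains the cluster fsid):

ceph mon dump

A namespace is also needed to run the ceph-csi services:

kubectl create ns ceph-csi

Generate the corresponding ConfigMap. clusterID is the cluster fsid and monitors are the mon addresses reported by ceph mon dump: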

cat <<EOF > csi-config-map.yaml
---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "2778ca6a-6a9a-11ef-b561-aeb7f1c0facf",
        "monitors": [
          "192.168.122.50:6789",
          "192.168.122.51:6789",
          "192.168.122.52:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config
  namespace: ceph-csi
EOF

Apply it:

kubectl apply -f csi-config-map.yaml

KMS configuration (02-kms-config.yaml). Configure a KMS here if you need one; this is only a test environment, so it is left empty:

---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    {}
metadata:
  name: ceph-csi-encryption-kms-config
  namespace: ceph-csi

Apply it:

kubectl apply -f 02-kms-config.yaml

03-ceph-config-map.yaml defines how clients authenticate to Ceph:

---
apiVersion: v1
kind: ConfigMap
data:
  ceph.conf: |
    [global]
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
  # keyring is a required key and its value should be empty
  keyring: |
metadata:
  name: ceph-config
  namespace: ceph-csi

Apply it:

kubectl apply -f 03-ceph-config-map.yaml

04-csi-rbd-secret.yaml holds the credentials created earlier:

---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: ceph-csi
stringData:
  userID: kubernetes
  userKey: xxxx # replace with the key of your credential

Apply it:

kubectl apply -f 04-csi-rbd-secret.yaml

05-csi-provisioner-rbac.yaml:

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-csi-provisioner
  # replace with non-default namespace name
  namespace: ceph-csi
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-external-provisioner-runner
  namespace: ceph-csi
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "update", "delete", "patch"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims/status"]
    verbs: ["update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshots"]
    verbs: ["get", "list", "watch", "update", "patch", "create"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshots/status"]
    verbs: ["get", "list", "patch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotcontents"]
    verbs: ["create", "get", "list", "watch", "update", "delete", "patch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["volumeattachments"]
    verbs: ["get", "list", "watch", "update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["volumeattachments/status"]
    verbs: ["patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["csinodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["snapshot.storage.k8s.io"]
    resources: ["volumesnapshotcontents/status"]
    verbs: ["update", "patch"]
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["serviceaccounts"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["serviceaccounts/token"]
    verbs: ["create"]
  - apiGroups: ["groupsnapshot.storage.k8s.io"]
    resources: ["volumegroupsnapshotclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["groupsnapshot.storage.k8s.io"]
    resources: ["volumegroupsnapshotcontents"]
    verbs: ["get", "list", "watch", "update", "patch"]
  - apiGroups: ["groupsnapshot.storage.k8s.io"]
    resources: ["volumegroupsnapshotcontents/status"]
    verbs: ["update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-provisioner-role
  namespace: ceph-csi
subjects:
  - kind: ServiceAccount
    name: rbd-csi-provisioner
    # replace with non-default namespace name
    namespace: ceph-csi
roleRef:
  kind: ClusterRole
  name: rbd-external-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  # replace with non-default namespace name
  namespace: ceph-csi
  name: rbd-external-provisioner-cfg
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "list", "watch", "create", "update", "delete"]
  - apiGroups: ["coordination.k8s.io"]
    resources: ["leases"]
    verbs: ["get", "watch", "list", "delete", "update", "create"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-provisioner-role-cfg
  # replace with non-default namespace name
  namespace: ceph-csi
subjects:
  - kind: ServiceAccount
    name: rbd-csi-provisioner
    # replace with non-default namespace name
    namespace: ceph-csi
roleRef:
  kind: Role
  name: rbd-external-provisioner-cfg
  apiGroup: rbac.authorization.k8s.io

Apply it:

kubectl apply -f 05-csi-provisioner-rbac.yaml

06-csi-nodeplugin-rbac.yaml:

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-csi-nodeplugin
  # replace with non-default namespace name
  namespace: ceph-csi
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin
  namespace: ceph-csi
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get"]
  # allow to read Vault Token and connection options from the Tenants namespace
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["serviceaccounts"]
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["volumeattachments"]
    verbs: ["list", "get"]
  - apiGroups: [""]
    resources: ["serviceaccounts/token"]
    verbs: ["create"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-csi-nodeplugin
  namespace: ceph-csi
subjects:
  - kind: ServiceAccount
    name: rbd-csi-nodeplugin
    # replace with non-default namespace name
    namespace: ceph-csi
roleRef:
  kind: ClusterRole
  name: rbd-csi-nodeplugin
  apiGroup: rbac.authorization.k8s.io

Apply it:

kubectl apply -f 06-csi-nodeplugin-rbac.yaml

07-csi-rbdplugin-provisioner.yaml:

---
kind: Service
apiVersion: v1
metadata:
  name: csi-rbdplugin-provisioner
  # replace with non-default namespace name
  namespace: ceph-csi
  labels:
    app: csi-metrics
spec:
  selector:
    app: csi-rbdplugin-provisioner
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8680
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: csi-rbdplugin-provisioner
  # replace with non-default namespace name
  namespace: ceph-csi
spec:
  replicas: 3
  selector:
    matchLabels:
      app: csi-rbdplugin-provisioner
  template:
    metadata:
      labels:
        app: csi-rbdplugin-provisioner
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - csi-rbdplugin-provisioner
              topologyKey: "kubernetes.io/hostname"
      serviceAccountName: rbd-csi-provisioner
      priorityClassName: system-cluster-critical
      containers:
        - name: csi-rbdplugin
          # for stable functionality replace canary with latest release version
          image: harbor.plz.ac/cephcsi/cephcsi:canary
          args:
            - "--nodeid=$(NODE_ID)"
            - "--type=rbd"
            - "--controllerserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--csi-addons-endpoint=$(CSI_ADDONS_ENDPOINT)"
            - "--v=5"
            - "--drivername=rbd.csi.ceph.com"
            - "--pidlimit=-1"
            - "--rbdhardmaxclonedepth=8"
            - "--rbdsoftmaxclonedepth=4"
            - "--enableprofiling=false"
            - "--setmetadata=true"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            # - name: KMS_CONFIGMAP_NAME
            #   value: encryptionConfig
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
            - name: CSI_ADDONS_ENDPOINT
              value: unix:///csi/csi-addons.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - mountPath: /dev
              name: host-dev
            - mountPath: /sys
              name: host-sys
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: ceph-csi-encryption-kms-config
              mountPath: /etc/ceph-csi-encryption-kms-config/
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
            - name: ceph-config
              mountPath: /etc/ceph/
            - name: oidc-token
              mountPath: /run/secrets/tokens
              readOnly: true
        - name: csi-provisioner
          image: harbor.plz.ac/sig-storage/csi-provisioner:v5.0.1
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=1"
            - "--timeout=150s"
            - "--retry-interval-start=500ms"
            - "--leader-election=true"
            - "--feature-gates=HonorPVReclaimPolicy=true"
            - "--prevent-volume-mode-conversion=true"
            # if fstype is not specified in storageclass, ext4 is default
            - "--default-fstype=ext4"
            - "--extra-create-metadata=true"
            - "--immediate-topology=false"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-snapshotter
          image: harbor.plz.ac/sig-storage/csi-snapshotter:v8.0.1
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=1"
            - "--timeout=150s"
            - "--leader-election=true"
            - "--extra-create-metadata=true"
            - "--enable-volume-group-snapshots=true"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-attacher
          image: harbor.plz.ac/sig-storage/csi-attacher:v4.6.1
          args:
            - "--v=1"
            - "--csi-address=$(ADDRESS)"
            - "--leader-election=true"
            - "--retry-interval-start=500ms"
            - "--default-fstype=ext4"
          env:
            - name: ADDRESS
              value: /csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-resizer
          image: harbor.plz.ac/sig-storage/csi-resizer:v1.11.1
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=1"
            - "--timeout=150s"
            - "--leader-election"
            - "--retry-interval-start=500ms"
            - "--handle-volume-inuse-error=false"
            - "--feature-gates=RecoverVolumeExpansionFailure=true"
          env:
            - name: ADDRESS
              value: unix:///csi/csi-provisioner.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
        - name: csi-rbdplugin-controller
          # for stable functionality replace canary with latest release version
          image: harbor.plz.ac/cephcsi/cephcsi:canary
          args:
            - "--type=controller"
            - "--v=5"
            - "--drivername=rbd.csi.ceph.com"
            - "--drivernamespace=$(DRIVER_NAMESPACE)"
            - "--setmetadata=true"
          env:
            - name: DRIVER_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
            - name: ceph-config
              mountPath: /etc/ceph/
        - name: liveness-prometheus
          image: harbor.plz.ac/cephcsi/cephcsi:canary
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8680"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi-provisioner.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: host-dev
          hostPath:
            path: /dev
        - name: host-sys
          hostPath:
            path: /sys
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: socket-dir
          emptyDir: {
            medium: "Memory"
          }
        - name: ceph-config
          configMap:
            name: ceph-config
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: ceph-csi-encryption-kms-config
          configMap:
            name: ceph-csi-encryption-kms-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }
        - name: oidc-token
          projected:
            sources:
              - serviceAccountToken:
                  path: oidc-token
                  expirationSeconds: 3600
                  audience: ceph-csi-kms

Note that the images above come from my internal registry. Change the image references to a registry that your cluster can reach.
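If you do not run a private registry, the image prefixes can be rewritten before applying the manifest. The sketch below assumes that the internal registry mirrors the upstream repository paths one to one, so that `harbor.plz.ac/cephcsi/` corresponds to `quay.io/cephcsi/` and `harbor.plz.ac/sig-storage/` to `registry.k8s.io/sig-storage/`; the helper name `rewrite_images` is hypothetical.

```shell
# Rewrite the private-registry image prefixes to the upstream registries.
# Reads a manifest on stdin and writes the rewritten manifest to stdout.
rewrite_images() {
  sed \
    -e 's#harbor\.plz\.ac/cephcsi/#quay.io/cephcsi/#' \
    -e 's#harbor\.plz\.ac/sig-storage/#registry.k8s.io/sig-storage/#'
}

# Small demonstration on a single line of the manifest.
printf 'image: harbor.plz.ac/cephcsi/cephcsi:canary\n' | rewrite_images
```

For a whole file this could be used as `rewrite_images < 07-csi-rbdplugin-provisioner.yaml > 07-upstream.yaml`, where the output file name is only an example.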

Apply it:

kubectl apply -f 07-csi-rbdplugin-provisioner.yaml

08-csi-rbdplugin.yaml:

---
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: csi-rbdplugin
  # replace with non-default namespace name
  namespace: ceph-csi
spec:
  selector:
    matchLabels:
      app: csi-rbdplugin
  template:
    metadata:
      labels:
        app: csi-rbdplugin
    spec:
      serviceAccountName: rbd-csi-nodeplugin
      hostNetwork: true
      hostPID: true
      priorityClassName: system-node-critical
      # to use e.g. Rook orchestrated cluster, and mons' FQDN is
      # resolved through k8s service, set dns policy to cluster first
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: csi-rbdplugin
          securityContext:
            privileged: true
            capabilities:
              add: ["SYS_ADMIN"]
            allowPrivilegeEscalation: true
          # for stable functionality replace canary with latest release version
          image: harbor.plz.ac/cephcsi/cephcsi:canary
          args:
            - "--nodeid=$(NODE_ID)"
            - "--pluginpath=/var/lib/kubelet/plugins"
            - "--stagingpath=/var/lib/kubelet/plugins/kubernetes.io/csi/"
            - "--type=rbd"
            - "--nodeserver=true"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--csi-addons-endpoint=$(CSI_ADDONS_ENDPOINT)"
            - "--v=5"
            - "--drivername=rbd.csi.ceph.com"
            - "--enableprofiling=false"
            # If topology based provisioning is desired, configure required
            # node labels representing the nodes topology domain
            # and pass the label names below, for CSI to consume and advertise
            # its equivalent topology domain
            # - "--domainlabels=failure-domain/region,failure-domain/zone"
            #
            # Options to enable read affinity.
            # If enabled Ceph CSI will fetch labels from kubernetes node and
            # pass `read_from_replica=localize,crush_location=type:value` during
            # rbd map command. refer:
            # https://docs.ceph.com/en/latest/man/8/rbd/#kernel-rbd-krbd-options
            # for more details.
            # - "--enable-read-affinity=true"
            # - "--crush-location-labels=topology.io/zone,topology.io/rack"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            # - name: KMS_CONFIGMAP_NAME
            #   value: encryptionConfig
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: CSI_ADDONS_ENDPOINT
              value: unix:///csi/csi-addons.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - mountPath: /dev
              name: host-dev
            - mountPath: /sys
              name: host-sys
            - mountPath: /run/mount
              name: host-mount
            - mountPath: /etc/selinux
              name: etc-selinux
              readOnly: true
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - name: ceph-csi-config
              mountPath: /etc/ceph-csi-config/
            - name: ceph-csi-encryption-kms-config
              mountPath: /etc/ceph-csi-encryption-kms-config/
            - name: plugin-dir
              mountPath: /var/lib/kubelet/plugins
              mountPropagation: "Bidirectional"
            - name: mountpoint-dir
              mountPath: /var/lib/kubelet/pods
              mountPropagation: "Bidirectional"
            - name: keys-tmp-dir
              mountPath: /tmp/csi/keys
            - name: ceph-logdir
              mountPath: /var/log/ceph
            - name: ceph-config
              mountPath: /etc/ceph/
            - name: oidc-token
              mountPath: /run/secrets/tokens
              readOnly: true
        - name: driver-registrar
          # This is necessary only for systems with SELinux, where
          # non-privileged sidecar containers cannot access unix domain socket
          # created by privileged CSI driver container.
          securityContext:
            privileged: true
            allowPrivilegeEscalation: true
          image: harbor.plz.ac/sig-storage/csi-node-driver-registrar:v2.11.1
          args:
            - "--v=1"
            - "--csi-address=/csi/csi.sock"
            - "--kubelet-registration-path=/var/lib/kubelet/plugins/rbd.csi.ceph.com/csi.sock"
          env:
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        - name: liveness-prometheus
          securityContext:
            privileged: true
            allowPrivilegeEscalation: true
          image: harbor.plz.ac/cephcsi/cephcsi:canary
          args:
            - "--type=liveness"
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--metricsport=8680"
            - "--metricspath=/metrics"
            - "--polltime=60s"
            - "--timeout=3s"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          volumeMounts:
            - name: socket-dir
              mountPath: /csi
          imagePullPolicy: "IfNotPresent"
      volumes:
        - name: socket-dir
          hostPath:
            path: /var/lib/kubelet/plugins/rbd.csi.ceph.com
            type: DirectoryOrCreate
        - name: plugin-dir
          hostPath:
            path: /var/lib/kubelet/plugins
            type: Directory
        - name: mountpoint-dir
          hostPath:
            path: /var/lib/kubelet/pods
            type: DirectoryOrCreate
        - name: ceph-logdir
          hostPath:
            path: /var/log/ceph
            type: DirectoryOrCreate
        - name: registration-dir
          hostPath:
            path: /var/lib/kubelet/plugins_registry/
            type: Directory
        - name: host-dev
          hostPath:
            path: /dev
        - name: host-sys
          hostPath:
            path: /sys
        - name: etc-selinux
          hostPath:
            path: /etc/selinux
        - name: host-mount
          hostPath:
            path: /run/mount
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: ceph-config
          configMap:
            name: ceph-config
        - name: ceph-csi-config
          configMap:
            name: ceph-csi-config
        - name: ceph-csi-encryption-kms-config
          configMap:
            name: ceph-csi-encryption-kms-config
        - name: keys-tmp-dir
          emptyDir: {
            medium: "Memory"
          }
        - name: oidc-token
          projected:
            sources:
              - serviceAccountToken:
                  path: oidc-token
                  expirationSeconds: 3600
                  audience: ceph-csi-kms
---
# This is a service to expose the liveness metrics
apiVersion: v1
kind: Service
metadata:
  name: csi-metrics-rbdplugin
  # replace with non-default namespace name
  namespace: ceph-csi
  labels:
    app: csi-metrics
spec:
  ports:
    - name: http-metrics
      port: 8080
      protocol: TCP
      targetPort: 8680
  selector:
    app: csi-rbdplugin

Again, pay attention to the image references; mine point at an internal registry.

Apply it:

kubectl apply -f 08-csi-rbdplugin.yaml

09-csi-rbd-sc.yaml:

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: 2778ca6a-6a9a-11ef-b561-aeb7f1c0facf
  pool: kubernetes
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: ceph-csi
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: ceph-csi
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: ceph-csi
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - discard

Apply it:

kubectl apply -f 09-csi-rbd-sc.yaml

At this point the Ceph CSI driver is fully deployed. Create an example pod to test it:

---
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-raw-block-volume
spec:
  containers:
    - name: fc-container
      image: harbor.plz.ac/library/fedora
      command: ["/bin/sh", "-c"]
      args: ["tail -f /dev/null"]
      volumeDevices:
        - name: data
          devicePath: /dev/xvda
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: raw-block-pvc

Mind the image address here as well; mine points at an internal registry.

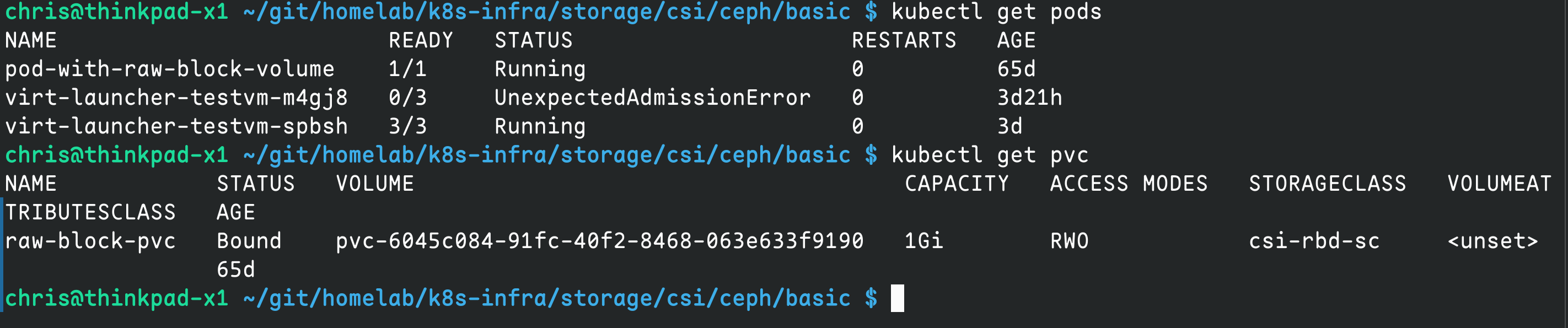
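The pod references a claim named raw-block-pvc, whose manifest is not reproduced in the text. A minimal sketch of such a claim follows, assuming the csi-rbd-sc storage class created earlier; the file name `10-raw-block-pvc.yaml` and the 1Gi size are assumptions for illustration.

```shell
# Write a raw-block PVC manifest that matches the claimName used by the pod.
# The file name and the requested size are illustrative assumptions.
cat > 10-raw-block-pvc.yaml <<'EOF'
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: raw-block-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF
```

The claim would need to exist before the pod can start, for example via `kubectl apply -f 10-raw-block-pvc.yaml`. `volumeMode: Block` is what allows the pod to consume the volume through `volumeDevices` rather than a mounted filesystem.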

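kubectl apply -f 11-raw-block-pod.yaml

Check the status:

kubectl get pods

kubectl get pvc

To make this the default storage class, run:

kubectl patch storageclass csi-rbd-sc -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

RADOS Gateway

Ceph provides object storage through the RADOS Gateway (RGW), and it needs only a little configuration to use.

For high availability and security, it is advisable to deploy a dedicated load balancer in front of it. I use nginx here, but any other load-balancing service works as well.

First configure RGW by writing a service spec file with the following content: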

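service_type: rgw
service_id: homelab
placement:
  count: 3
spec:
  rgw_frontend_port: 7480

This places an RGW daemon on each of the three nodes, listening on port 7480.

Apply the spec:

sudo ceph orch apply -i homelab.yaml

Wait about a minute after applying, then check whether the port has been bound:

sudo ss -nlp |grep 7480

If the port is listening, RGW has started normally.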
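A plain grep for 7480 also matches other ports or process IDs that happen to contain those digits. The sketch below is a stricter check under the assumption that the input is the output of `ss -nlt`, where the first column is the state and the fourth is the local address and port; the helper name `port_listening` is hypothetical.

```shell
# Succeed only if a TCP socket is listening on exactly the given port.
# Reads `ss -nlt` output on stdin; usage: ss -nlt | port_listening 7480
port_listening() {
  awk -v port="$1" '
    # Column 1 is the socket state, column 4 is "local-address:port".
    $1 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
    END { exit !found }
  '
}
```

On a node this could be run as `ss -nlt | port_listening 7480 && echo "RGW is listening"`.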

Next, configure nginx. A dedicated domain, s3.infra.plz.ac, is used here:

upstream ceph_rgw {
    server 10.31.0.77:7480;
    server 10.31.0.78:7480;
    server 10.31.0.79:7480;
}

server {
    listen 443 ssl;
    server_name s3.infra.plz.ac;

    ssl_certificate /etc/nginx/ssl/s3.infra.plz.ac.pem;
    ssl_certificate_key /etc/nginx/ssl/s3.infra.plz.ac.key;
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers HIGH:!aNULL:!MD5;

    location / {
        proxy_pass http://ceph_rgw;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Authorization $http_authorization;
        proxy_http_version 1.1;
        proxy_request_buffering off;
        client_max_body_size 5G;
    }
}

server {
    listen 80;
    server_name s3.infra.plz.ac;
    return 301 https://$host$request_uri;
}

Test the configuration and reload nginx:

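nginx -t && nginx -s reload

Create a user:

sudo radosgw-admin user create --uid=infra --display-name="Infra" --email="<your-email-address>"

Note down the access key and secret key from the output. s3cmd is used here to test that the gateway works:

sudo apt-get install s3cmd -y

Configure s3cmd:

vi ~/.s3cfg

with the following content: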

[default]

access_key =

secret_key =

host_base = s3.infra.plz.ac

host_bucket = %(bucket).s3.infra.plz.ac

use_https = True
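Instead of editing the file by hand, the same configuration can be rendered from the keys returned by radosgw-admin. This is a small sketch; the helper name `render_s3cfg` and the example key values are placeholders, not real credentials.

```shell
# Render an s3cmd configuration for the RGW endpoint.
# Usage: render_s3cfg <access_key> <secret_key> <endpoint_host>
render_s3cfg() {
  cat <<EOF
[default]
access_key = $1
secret_key = $2
host_base = $3
host_bucket = %(bucket).$3
use_https = True
EOF
}

# Placeholder keys; substitute the values printed by radosgw-admin.
render_s3cfg "EXAMPLE_ACCESS_KEY" "EXAMPLE_SECRET_KEY" s3.infra.plz.ac
```

Redirecting the output to `~/.s3cfg` and running `chmod 600 ~/.s3cfg` keeps the keys readable only by the current user. Note that a `host_bucket` containing `%(bucket)` makes s3cmd address buckets as subdomains, which presumably requires wildcard DNS and a matching certificate for the endpoint; setting `host_bucket` to the bare host name should make s3cmd fall back to path-style requests.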

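Test:

s3cmd mb s3://demo # create a bucket

echo hello > hello.txt

s3cmd put hello.txt s3://demo # upload the file to the bucket

s3cmd get s3://demo/hello.txt --force # download the file, overwriting the local copy

s3cmd del s3://demo --force --recursive # delete every object in the bucket

s3cmd rb s3://demo # remove the bucket

Monitoring

The monitoring stack is installed by default, so Ceph's Grafana can be used directly at https://ip:3000.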
