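Automating Hugo Builds and Deployments with Gitea in a Homelab: From Build to GitOps in Practice

This article uses this blog as the example and shows how to build and deploy it automatically with Gitea in a homelab.

Why Hugo

- Fast builds, static HTML output, easy to deploy

- Markdown authoring, with themes and modular extensions

- Simple, efficient, and cheap to maintain for a homelab blog

Ways to deploy Hugo

- Static files + Nginx

- GitHub Actions: automatic build and automatic deployment to GitHub Pages

- Automated build + image packaging + deployment to K8s (the approach used here)

Environment

The services and domains involved are: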

| Domain | Service | Description |
|---|---|---|
| mirrors.infra.plz.ac | nginx | Offline mirror site |
| harbor.infra.plz.ac | harbor | Offline image registry |
| git.infra.plz.ac | gitea | Git repository hosting |
| blog.app.plz.ac | hugo | Hugo test site |
| cd.infra.plz.ac | argocd | Argo CD, used to deploy applications |
| auth.infra.plz.ac | authentik | Homelab SSO |
| s3.infra.plz.ac | Ceph RGW | S3 storage |
| grafana.infra.plz.ac | grafana | Logs and service monitoring |

In addition, the following Docker registry mirrors are used:

| FQDN | Upstream | Purpose | Notes |
|---|---|---|---|
| docker-proxy.plz.ac | docker.io | Accelerates Docker Hub images | |
| ghcr.plz.ac | ghcr.io | Accelerates GitHub container images | |
| k8s-io.plz.ac | k8s.io | Kubernetes image registry | |
| quay.plz.ac | quay.io | Quay registry | |

K8s cluster

I prepared 8 virtual machines as the nodes for this deployment. For the cluster setup itself, see the previous article, "Offline Kubernetes Deployment with Kubespray".

| name | role | desc |
|---|---|---|
| infra-master-1 | master | |
| infra-master-2 | master | |
| infra-master-3 | master | |
| infra-worker-1 | worker | |
| infra-worker-2 | worker | |
| infra-worker-3 | worker | |
| infra-worker-4 | worker | |
| infra-worker-5 | worker | |

The cluster already runs ingress-nginx, cert-manager, and MetalLB. To issue certificates, I also configured a cluster-issuer named cloudflare.

MetalLB uses two IPs here:

- 10.31.0.252 # IP used for DNS resolution

- 10.31.0.253 # load balancer IP shared by the ingresses

The configuration is as follows:

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: default-pool

namespace: metallb

spec:

addresses:

- 10.31.0.253/32

- 10.31.0.252/32

---

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: default-pool

namespace: metallb

spec:

ipAddressPools:

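- default-pool

To make sure later services can resolve each other, I also deployed a separate etcd + CoreDNS + ExternalDNS stack for internal DNS resolution.

The deployment files are as follows:

etcd-values.yaml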

global:

security:

allowInsecureImages: true

image:

registry: docker-proxy.plz.ac

clusterDomain: infra.homelab

auth:

rbac:

enabled: false

create: false

coredns-values.yaml

Because of a problem with my network, IPv6 has to be disabled at the DNS layer here; otherwise all kinds of resolution issues show up.

image:

repository: docker-proxy.plz.ac/coredns/coredns

serviceType: LoadBalancer

service:

loadBalancerIP: 10.31.0.252

servers:

- zones:

- zone: .

port: 53

# -- expose the service on a different port

# servicePort: 5353

# If serviceType is nodePort you can specify nodePort here

# nodePort: 30053

# hostPort: 53

plugins:

- name: errors

# Serves a /health endpoint on :8080, required for livenessProbe

- name: health

configBlock: |-

lameduck 10s

# Serves a /ready endpoint on :8181, required for readinessProbe

- name: ready

# Required to query kubernetes API for data

- name: kubernetes

parameters: infra.homelab in-addr.arpa

configBlock: |-

pods insecure

fallthrough in-addr.arpa

ttl 30

- name: template # use the template plugin

parameters: ANY AAAA # match every AAAA query

configBlock: |-

rcode NXDOMAIN # answer these queries with the NXDOMAIN response code

- name: etcd

configBlock: |-

endpoint http://etcd.dns.svc.infra.homelab:2379

path /skydns

fallthrough

# Serves a /metrics endpoint on :9153, required for serviceMonitor

- name: prometheus

parameters: 0.0.0.0:9153

- name: forward

parameters: . 1.1.1.1 8.8.8.8

- name: cache

parameters: 30

- name: loop

- name: reload

- name: loadbalance

external-dns-values.yaml

global:

security:

allowInsecureImages: true

image:

registry: docker-proxy.plz.ac

provider: coredns

policy: upsert-only

txtOwnerId: "homelab"

extraEnvVars:

- name: ETCD_URLS

value: "http://etcd.dns.svc.infra.homelab:2379"

serviceAccount:

create: true

name: "external-dns"

ingressClassFilters:

- nginx

Image registry

The image registry is Harbor, deployed into the current K8s cluster with its Helm chart.

The values.yaml used for the deployment:

expose:

type: ingress

ingress:

hosts:

core: harbor.infra.plz.ac

className: nginx

annotations:

# note different ingress controllers may require a different ssl-redirect annotation

# for Envoy, use ingress.kubernetes.io/force-ssl-redirect: "true" and remove the nginx lines below

ingress.kubernetes.io/ssl-redirect: "true"

ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/ssl-redirect: "true"

nginx.ingress.kubernetes.io/proxy-body-size: "0"

cert-manager.io/cluster-issuer: "cloudflare"

externalURL: https://harbor.infra.plz.ac

nginx:

image:

repository: docker-proxy.plz.ac/goharbor/nginx-photon

portal:

image:

repository: docker-proxy.plz.ac/goharbor/harbor-portal

core:

image:

repository: docker-proxy.plz.ac/goharbor/harbor-core

jobservice:

image:

repository: docker-proxy.plz.ac/goharbor/harbor-jobservice

registry:

registry:

image:

repository: docker-proxy.plz.ac/goharbor/registry-photon

controller:

image:

repository: docker-proxy.plz.ac/goharbor/harbor-registryctl

trivy:

image:

# repository the repository for Trivy adapter image

repository: docker-proxy.plz.ac/goharbor/trivy-adapter-photon

database:

internal:

image:

repository: docker-proxy.plz.ac/goharbor/harbor-db

redis:

internal:

image:

repository: docker-proxy.plz.ac/goharbor/redis-photon

exporter:

image:

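repository: docker-proxy.plz.ac/goharbor/harbor-exporter

After Harbor is deployed, create the following projects:

- act (public)

- slchris (private), used to deliver the internal Hugo site

Push the base nginx image that the Hugo site image is built on: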

docker pull nginx

docker tag docker.io/library/nginx:latest harbor.infra.plz.ac/library/nginx

docker push harbor.infra.plz.ac/library/nginx

mirrors service

The mirrors service serves the files that the Gitea Actions workflows need later. Its deployment file:

---

apiVersion: v1

kind: ConfigMap

metadata:

name: default-conf

data:

default.conf: |

server {

listen 80;

server_name localhost;

#access_log /var/log/nginx/host.access.log main;

error_log /var/log/nginx/mirrors_error.log;

access_log /var/log/nginx/mirrors_access.log;

location / {

autoindex on;

autoindex_exact_size off;

autoindex_localtime on;

root /usr/share/nginx/html;

index index.html index.htm;

}

location ^~ /debian/ {

proxy_pass https://mirrors.tuna.tsinghua.edu.cn/debian/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.tuna.tsinghua.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /debian-nonfree/ {

proxy_pass https://mirrors.tuna.tsinghua.edu.cn/debian-nonfree/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.tuna.tsinghua.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /debian-security/ {

proxy_pass https://mirrors.tuna.tsinghua.edu.cn/debian-security/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.tuna.tsinghua.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /docker-ce/ {

proxy_pass https://mirrors.ustc.edu.cn/docker-ce/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.ustc.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /ceph/ {

proxy_pass https://mirrors.ustc.edu.cn/ceph/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.ustc.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /elasticstack/ {

proxy_pass https://mirrors.tuna.tsinghua.edu.cn/elasticstack/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.tuna.tsinghua.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /mongodb/ {

proxy_pass https://mirrors.tuna.tsinghua.edu.cn/mongodb/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.tuna.tsinghua.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /gitlab-ce/ {

proxy_pass https://mirrors.tuna.tsinghua.edu.cn/gitlab-ce/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.tuna.tsinghua.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /gitlab-runner/ {

proxy_pass https://mirrors.tuna.tsinghua.edu.cn/gitlab-runner/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.tuna.tsinghua.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /fedora/ {

proxy_pass https://mirrors.ustc.edu.cn/fedora/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.ustc.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /jenkins/ {

proxy_pass https://mirrors.ustc.edu.cn/jenkins/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.ustc.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /percona/ {

proxy_pass https://mirrors.ustc.edu.cn/percona/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.ustc.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /proxmox/ {

proxy_pass https://mirrors.ustc.edu.cn/proxmox/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.ustc.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /pypi/ {

proxy_pass https://mirrors.ustc.edu.cn/pypi/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.ustc.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /alpine/ {

proxy_pass https://mirrors.ustc.edu.cn/alpine/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.ustc.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

location ^~ /openresty/ {

proxy_pass https://mirrors.ustc.edu.cn/openresty/;

proxy_ssl_server_name on;

proxy_set_header Host mirrors.ustc.edu.cn;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

}

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: mirrors-pvc

namespace: default

spec:

accessModes:

- ReadWriteOnce

storageClassName: csi-rbd-sc

resources:

requests:

storage: 100Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: mirrors-nginx

namespace: default

spec:

selector:

matchLabels:

app: mirrors-nginx

template:

metadata:

labels:

app: mirrors-nginx

spec:

containers:

- name: mirrors-nginx

image: docker-proxy.plz.ac/library/nginx

resources:

limits:

memory: "128Mi"

cpu: "500m"

ports:

- containerPort: 80

volumeMounts:

- name: mirrors-nginx

mountPath: /usr/share/nginx/html

- name: default-conf

mountPath: /etc/nginx/conf.d

volumes:

- name: mirrors-nginx

persistentVolumeClaim:

claimName: mirrors-pvc

- name: default-conf

configMap:

name: default-conf

items:

- key: default.conf

path: default.conf

---

apiVersion: v1

kind: Service

metadata:

name: mirrors-nginx

spec:

selector:

app: mirrors-nginx

ports:

- port: 80

targetPort: 80

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: mirrors-nginx

labels:

name: mirrors-nginx

annotations:

cert-manager.io/cluster-issuer: "cloudflare"

nginx.ingress.kubernetes.io/force-ssl-redirect: "true"

spec:

ingressClassName: nginx

rules:

- host: mirrors.infra.plz.ac

http:

paths:

- pathType: Prefix

path: "/"

backend:

service:

name: mirrors-nginx

port:

number: 80

tls:

- hosts:

- mirrors.infra.plz.ac

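secretName: mirrors-nginx-tls

Once it is deployed, exec into the pod and upload the required resources, for example:

- the Docker install script

- the kubectl binary

- the hugo binary

Gitea server

Gitea is also deployed with its Helm chart: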

global:

imageRegistry: "docker-proxy.plz.ac"

security:

allowInsecureImages: true

persistence:

enabled: true

size: 100Gi

ingress:

enabled: true

hostname: git.infra.plz.ac

tls:

- hosts:

- git.infra.plz.ac

secretName: gitea-tls

annotations:

cert-manager.io/cluster-issuer: "cloudflare"

nginx.ingress.kubernetes.io/force-ssl-redirect: "true"

nginx.ingress.kubernetes.io/proxy-body-size: "1000m"

ingressClassName: nginx

adminUsername: admin

adminPassword: "RZdd8rARozmE71tc"

adminEmail: [email protected]

appName: VC-gitea

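rootURL: "https://git.infra.plz.ac"

Gitea Actions

Deploying the Gitea Actions runner with the Helm chart did not work for me. In testing, a runner on a separate virtual machine connected to the current Gitea server without problems, so for now I run it standalone.

I prepared a virtual machine with 4 cores, 8 GB of RAM, and a 60 GB disk for it.

First install Docker on the node:

export DOWNLOAD_URL="https://mirrors.infra.plz.ac/docker-ce"

curl https://mirrors.infra.plz.ac/docker/install.sh | bash

Download act_runner from https://gitea.com/gitea/act_runner/releases: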

wget -c https://gitea.com/gitea/act_runner/releases/download/v0.2.12/act_runner-0.2.12-linux-amd64

chmod +x act_runner-0.2.12-linux-amd64

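mv act_runner-0.2.12-linux-amd64 act_runner

Generate the runner configuration (the command prints to stdout, so redirect it to a file):

./act_runner generate-config > config.yaml

The public runner images also need to be pushed to the internal Harbor: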

docker login harbor.infra.plz.ac

docker pull docker.gitea.com/runner-images:ubuntu-latest

docker tag docker.gitea.com/runner-images:ubuntu-latest harbor.infra.plz.ac/act/runner-images:ubuntu-latest

docker push harbor.infra.plz.ac/act/runner-images:ubuntu-latest

docker pull docker.gitea.com/runner-images:ubuntu-22.04

docker tag docker.gitea.com/runner-images:ubuntu-22.04 harbor.infra.plz.ac/act/runner-images:ubuntu-22.04

docker push harbor.infra.plz.ac/act/runner-images:ubuntu-22.04

docker pull docker.gitea.com/runner-images:ubuntu-20.04

docker tag docker.gitea.com/runner-images:ubuntu-20.04 harbor.infra.plz.ac/act/runner-images:ubuntu-20.04

docker push harbor.infra.plz.ac/act/runner-images:ubuntu-20.04 对应的配置要修改一下,vi config.yaml:

labels:

- "ubuntu-latest:docker://harbor.infra.plz.ac/act/runner-images:ubuntu-latest"

- "ubuntu-22.04:docker://harbor.infra.plz.ac/act/runner-images:ubuntu-22.04"

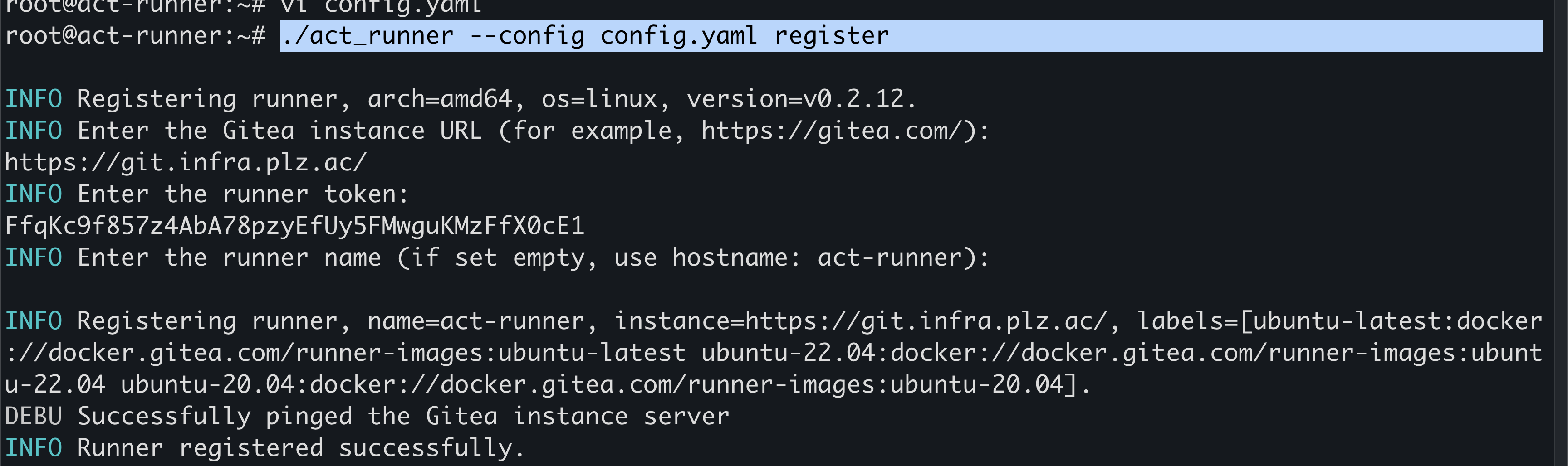

- "ubuntu-20.04:docker://harbor.infra.plz.ac/act/runner-images:ubuntu-20.04"注册到gitea,这里会提示需要输入runner的token这个是可以在gitea的Admin Settings -> Action -> Runners -> Create nes Runners获取到token:

./act_runner --config config.yaml register

接下来还需要配置systemd用于管理服务,出于安全考虑还需要创建一个单独的用户来跑这个服务:

mkdir -pv /etc/act_runner/

mv act_runner /usr/local/bin/act_runner

mv config.yaml /etc/act_runner/config.yaml

useradd act_runner -m -s /sbin/nologin

chown -R act_runner:act_runner /etc/act_runner

mkdir -pv /var/lib/act_runner

mv .runner /var/lib/act_runner

chown -R act_runner:act_runner /var/lib/act_runner

usermod -aG docker act_runner

Create the service file:

vi /etc/systemd/system/act_runner.service

with the following content:

[Unit]

Description=Gitea Actions runner

Documentation=https://gitea.com/gitea/act_runner

After=docker.service

[Service]

ExecStart=/usr/local/bin/act_runner daemon --config /etc/act_runner/config.yaml

ExecReload=/bin/kill -s HUP $MAINPID

WorkingDirectory=/var/lib/act_runner

TimeoutSec=0

RestartSec=10

Restart=always

User=act_runner

[Install]

WantedBy=multi-user.target加入开机启动并启动:

systemctl daemon-reload

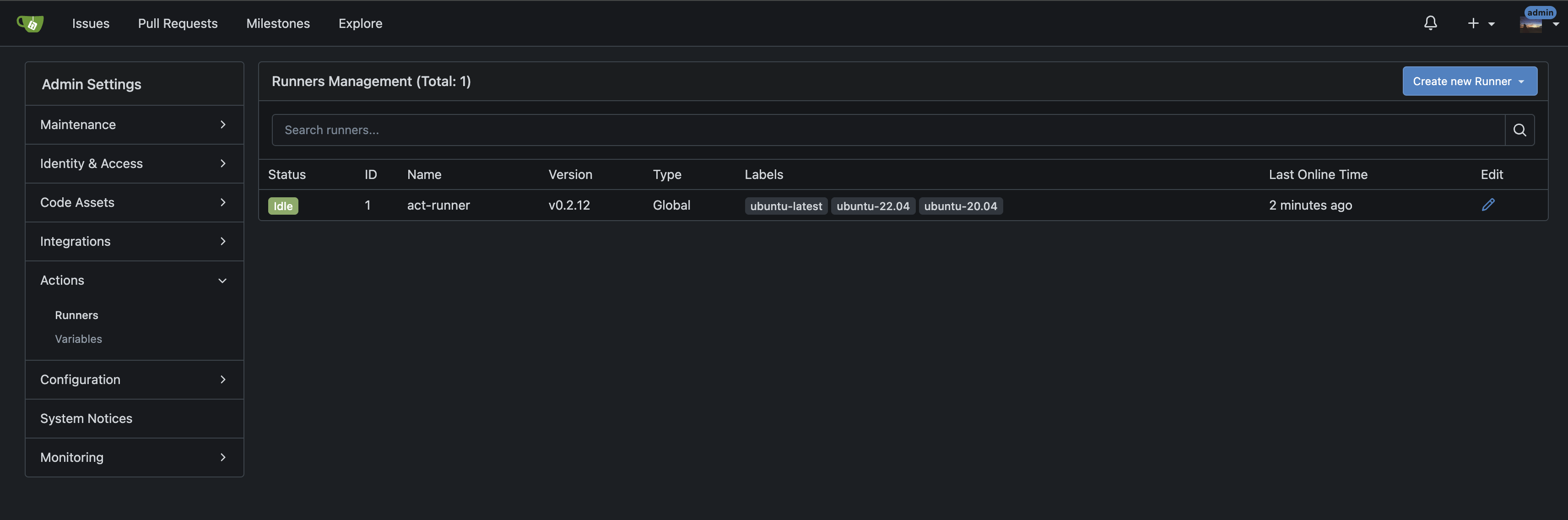

systemctl enable act_runner --now验证服务状态,可以在Admin Settings -> Action -> Runners查看具体的状态:

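Hugo build and packaging pipeline

Building Hugo is actually very simple:

- Check out the repository

- Update the submodules

- Generate the static files with hugo

- Package them into an image with Docker

- Push the image to Harbor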
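The steps above can be sketched as a single shell script. This is only a rough sketch under a few assumptions: it runs inside an already checked-out repository, and DRY_RUN, run, and build_and_push are names made up for illustration. By default it only prints the commands instead of executing them.

```shell
#!/bin/sh
# Sketch of the build pipeline. DRY_RUN and the helper names are illustrative.
set -eu

REGISTRY="harbor.infra.plz.ac"   # registry used in this article
IMAGE="slchris/blog"             # Harbor project/repository used in this article
DRY_RUN="${DRY_RUN:-1}"          # 1 = only print the commands (default)

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# $1: full commit SHA; the image tag is its first 8 characters.
build_and_push() {
  tag=$(printf '%s' "$1" | cut -c1-8)
  run git submodule update --init
  run hugo --minify
  run docker build -t "${REGISTRY}/${IMAGE}:${tag}" .
  run docker push "${REGISTRY}/${IMAGE}:${tag}"
}

# Example with a made-up commit SHA.
build_and_push "0123456789abcdef0123456789abcdef01234567"
```

Running it with DRY_RUN=0 executes the same commands for real, which is handy for reproducing a failed build by hand on the runner.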

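Gitea Actions is very similar to GitHub Actions, and GitHub's actions can be used directly. Because of network conditions, however, downloading them may fail.

I mirrored the commonly used checkout action into the local Gitea, so the uses reference has to point at the local copy. Here is an example, .gitea/workflows/build.yaml: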

name: Build Hugo Site

on:

push:

branches:

- mbp

jobs:

build:

name: Build Hugo Site

# build agent

runs-on: ubuntu-latest

steps:

- uses: https://git.infra.plz.ac/actions/checkout@releases/v4.0.0

- name: Setup Hugo

run: |

wget -c https://mirrors.infra.plz.ac/bin/hugo/v0.147.9/hugo_extended_0.147.9_linux-amd64.tar.gz

tar -xf hugo_extended_0.147.9_linux-amd64.tar.gz

mv hugo /usr/local/bin

hugo version

rm -rf hugo_extended_0.147.9_linux-amd64.tar.gz

# build site

- name: Build Hugo Site

run: |

git submodule update --init

hugo --minify

- name: Set SHORT_SHA

run: echo "SHORT_SHA=$(echo ${{ gitea.sha }} | cut -c1-8)" >> $GITHUB_ENV

- name: Login to Harbor

run: |

echo "${{ secrets.REGISTRY_PASSWORD }}" | docker login harbor.infra.plz.ac -u "${{ secrets.REGISTRY_USERNAME }}" --password-stdin

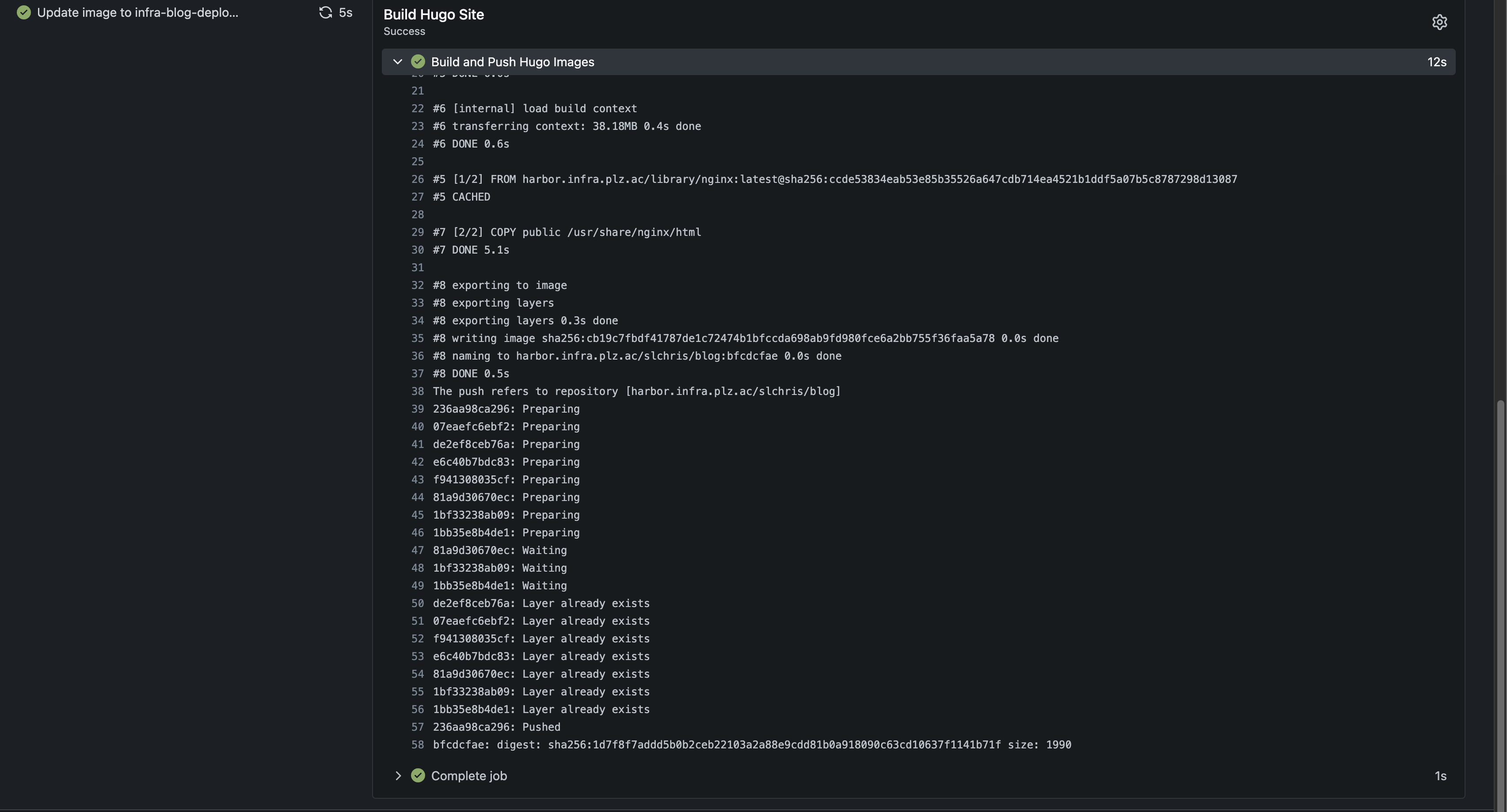

- name: Build and Push Hugo Images

run: |

docker build -t harbor.infra.plz.ac/slchris/blog:${SHORT_SHA} .

docker push harbor.infra.plz.ac/slchris/blog:${SHORT_SHA}这里有几个要注意的点:

- checkout要做离线处理

- 仓库涉及到的所有submodules都要做离线处理

- gitea runner要配置好对应的secrets,如harbor的账号和密码

- Dockerfile涉及到的镜像也需要进行离线处理。
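The last point is easy to verify before a build. The following is a minimal sketch, assuming every base image should come from harbor.infra.plz.ac; check_from_images is a hypothetical helper, not part of Docker or Gitea. It flags multi-stage references to an earlier stage name as well, so treat its output as a hint rather than a verdict.

```shell
#!/bin/sh
# Sketch: flag Dockerfile base images that do not come from the internal registry.
# check_from_images is a hypothetical helper, not part of Docker or Gitea.

# $1: path to a Dockerfile, $2: registry host that every base image must use.
# Prints offending images and returns 1 if there is at least one.
check_from_images() {
  awk -v reg="$2" '
    toupper($1) == "FROM" {
      img = $2
      if (substr(img, 1, 2) == "--") img = $3        # skip a --platform=... flag
      if (img != "scratch" && index(img, reg "/") != 1) {
        print img
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  ' "$1"
}

# Example: a Dockerfile like the one in this article passes the check.
tmp=$(mktemp)
printf 'FROM harbor.infra.plz.ac/library/nginx\nCOPY public /usr/share/nginx/html\n' > "$tmp"
if check_from_images "$tmp" harbor.infra.plz.ac; then
  echo "all base images are served from the internal registry"
fi
rm -f "$tmp"
```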

我这里Dockerfile是直接丢在仓库的根目录的内容如下:

# hugo dockerfiles

FROM harbor.infra.plz.ac/library/nginx

LABEL maintainer="Chris Su <[email protected]>"

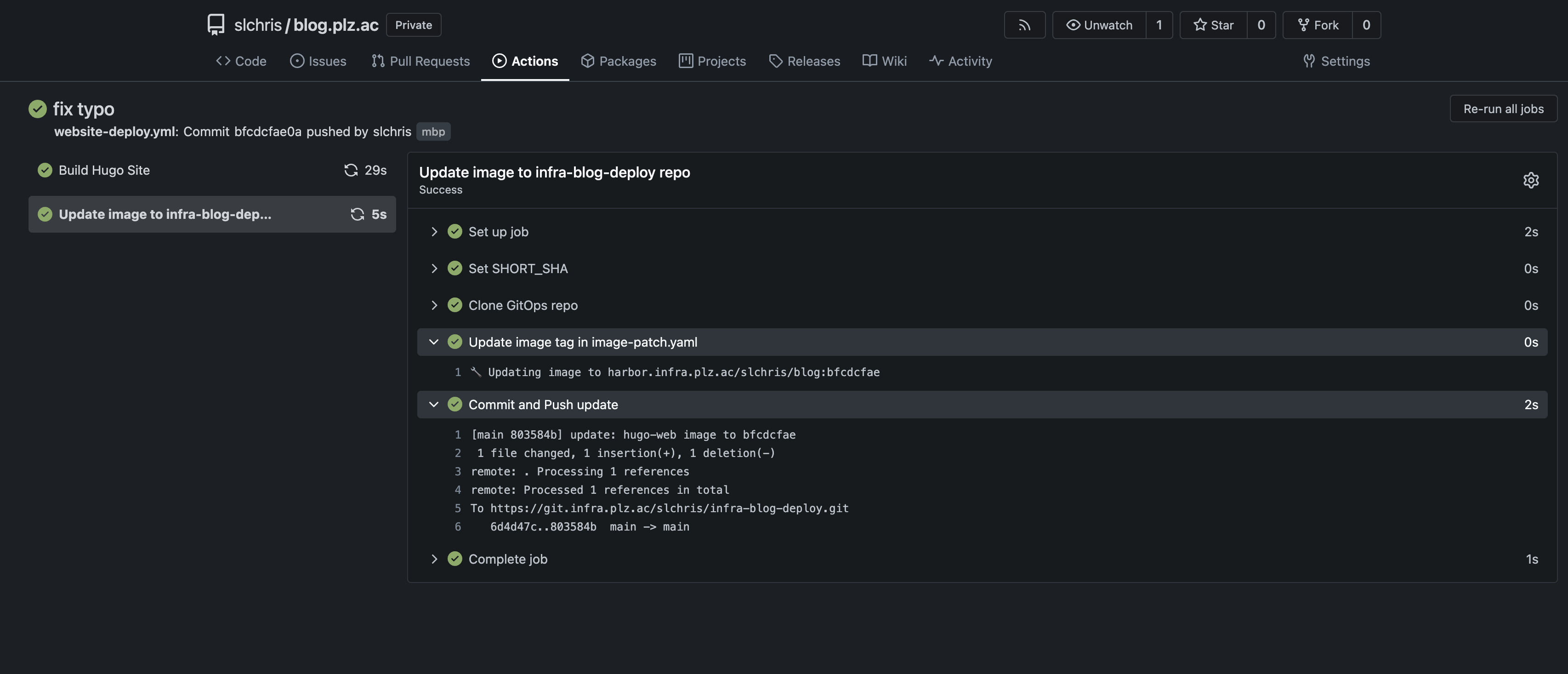

COPY public /usr/share/nginx/html这里是设置在push到特定分支如mbp分支之后才会触发,等到部署完成就可以在输出的结果中看到对应的信息:

到这里就实现了如何构建好hugo镜像以及push到harbor仓库里了。

如何部署到k8s

为了能够部署到k8s我这里准备了好了manifest:

apiVersion: apps/v1

kind: Deployment

metadata:

name: hugo-web

namespace: blog

spec:

replicas: 1

selector:

matchLabels:

app: hugo-web

template:

metadata:

labels:

app: hugo-web

spec:

imagePullSecrets:

- name: harbor-cred

containers:

- name: hugo-web

image: harbor.infra.plz.ac/slchris/blog:bba3776f

ports:

- containerPort: 80

resources:

limits:

cpu: "500m"

memory: "256Mi"

requests:

cpu: "100m"

memory: "128Mi"

---

apiVersion: v1

kind: Service

metadata:

name: hugo-web

namespace: blog

spec:

selector:

app: hugo-web

ports:

- protocol: TCP

port: 80

targetPort: 80

type: ClusterIP

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: hugo-web

namespace: blog

annotations:

cert-manager.io/cluster-issuer: "cloudflare"

nginx.ingress.kubernetes.io/force-ssl-redirect: "true"

spec:

ingressClassName: nginx

tls:

- hosts:

- blog.app.plz.ac

secretName: hugo-web-tls

rules:

- host: blog.app.plz.ac

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: hugo-web

port:

number: 80

Create the namespace:

kubectl create ns blog

Create the imagePullSecrets:

kubectl create secret docker-registry harbor-cred --docker-server=harbor.infra.plz.ac --docker-username=admin --docker-password=Harbor12345 -n blog

Apply the manifest:

kubectl apply -f blog.yaml

The next question is how to roll the new image out to the K8s cluster once Gitea Actions has built it. Here is a very simple workflow:

name: Build and deploy Hugo Site

on:

push:

branches:

- mbp

jobs:

build:

name: Build Hugo Site

# build agent

runs-on: ubuntu-latest

steps:

- uses: https://git.infra.plz.ac/actions/checkout@releases/v4.0.0

- name: Setup Hugo

run: |

wget -c https://mirrors.infra.plz.ac/bin/hugo/v0.147.9/hugo_extended_0.147.9_linux-amd64.tar.gz

tar -xf hugo_extended_0.147.9_linux-amd64.tar.gz

mv hugo /usr/local/bin

hugo version

rm -rf hugo_extended_0.147.9_linux-amd64.tar.gz

# build site

- name: Build Hugo Site

run: |

git submodule update --init

hugo --minify

- name: Set SHORT_SHA

run: echo "SHORT_SHA=$(echo ${{ gitea.sha }} | cut -c1-8)" >> $GITHUB_ENV

- name: Login to Harbor

run: |

echo "${{ secrets.REGISTRY_PASSWORD }}" | docker login harbor.infra.plz.ac -u "${{ secrets.REGISTRY_USERNAME }}" --password-stdin

- name: Build and Push Hugo Images

run: |

docker build -t harbor.infra.plz.ac/slchris/blog:${SHORT_SHA} .

docker push harbor.infra.plz.ac/slchris/blog:${SHORT_SHA}

deploy:

name: Deploy to Kubernetes

runs-on: ubuntu-latest

needs: build

steps:

- name: Decode kubeconfig

run: |

echo "${{ secrets.KUBECONFIG_B64 }}" | base64 -d > kubeconfig

env:

KUBECONFIG_B64: ${{ secrets.KUBECONFIG_B64 }}

- name: Set KUBECONFIG env

run: |

echo "KUBECONFIG=$PWD/kubeconfig" >> $GITHUB_ENV

- name: Install kubectl command

run: |

wget -c https://mirrors.infra.plz.ac/k8s/dl.k8s.io/release/v1.32.5/bin/linux/amd64/kubectl

chmod +x kubectl

mv kubectl /usr/local/bin

kubectl

- name: Update Deployment Image

run: |

kubectl --kubeconfig=$PWD/kubeconfig -n blog set image deployment/hugo-web hugo-web=harbor.infra.p

lz.ac/slchris/blog:${{ env.SHORT_SHA }}这里要注意的是KUBECONFIG_B64是经过base64加密之后的内容,默认的情况下是不能给kubeconfig直接拿来用的要去修改其server地址为gitea action能够访问到的地址才行。

要给这个kubeconfig的ip修改成实际可以连接到的ip不然会出错:

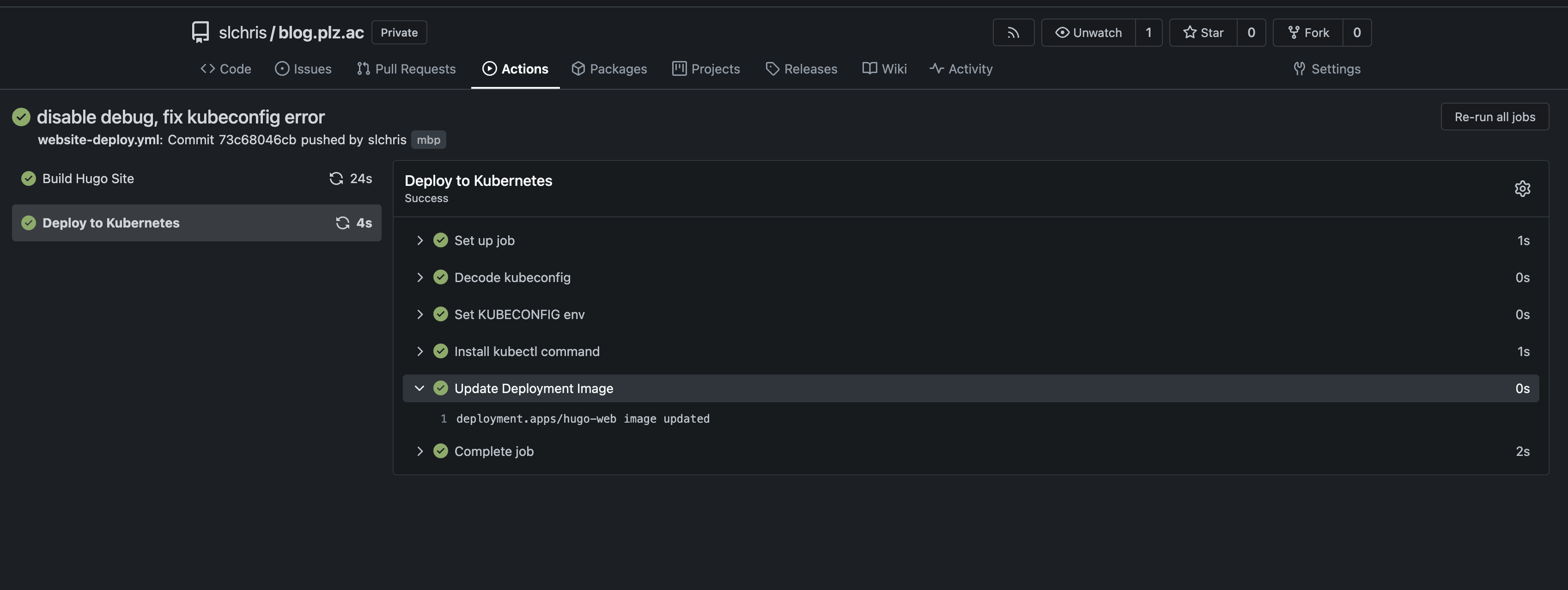

cat ~/.kube/config | base64 -w 0当推送之后就可以看到集群内的镜像已经被更新掉了:

这个其实也是有问题的实际的环境可能说没办法直接连接到k8s集群,另外像是回滚等操作就会涉及到手动和查找之前的镜像id非常的不方便。
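Preparing the KUBECONFIG_B64 value can be scripted. This is a minimal sketch, assuming a kubeconfig with a single cluster; encode_kubeconfig is a hypothetical helper, and the 127.0.0.1 address in the demo file is made up. The target address 10.31.0.110:6443 is the API server address that appears in the Argo CD cluster list in this article.

```shell
#!/bin/sh
# Sketch: point a kubeconfig at a reachable API server and base64-encode it
# for the KUBECONFIG_B64 secret. encode_kubeconfig is a hypothetical helper.

# $1: kubeconfig path, $2: API server URL reachable from the runner.
# Note: this rewrites every "server:" line, so it assumes a single-cluster file.
encode_kubeconfig() {
  sed "s|^\([[:space:]]*server:\).*|\1 $2|" "$1" | base64 | tr -d '\n'
}

# Example with a throwaway file instead of the real ~/.kube/config.
demo=$(mktemp)
printf 'clusters:\n- cluster:\n    server: https://127.0.0.1:6443\n  name: infra.homelab\n' > "$demo"
encoded=$(encode_kubeconfig "$demo" "https://10.31.0.110:6443")

# Decode again to confirm what the workflow will see.
printf '%s' "$encoded" | base64 -d
rm -f "$demo"
```

Piping through tr -d '\n' gives the same single-line result as base64 -w 0 while also working with base64 implementations that lack the -w flag.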

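Moving to GitOps

To solve these problems I am bringing in Argo CD to make the workflow more GitOps-style: every release is recorded, and rolling back becomes easy.

Deploying Argo CD

This is deployed with a Helm chart as well: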

global:

domain: cd.infra.plz.ac

image:

repository: quay.plz.ac/argoproj/argocd

server:

enabled: true

service:

type: ClusterIP # or another Service type, depending on your needs

ingress:

ingressClassName: nginx

enabled: true

name: argocd-ingress

path: /

pathType: Prefix

hosts:

- host: cd.infra.plz.ac

paths:

- /

annotations:

nginx.ingress.kubernetes.io/force-ssl-redirect: "true"

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

cert-manager.io/cluster-issuer: "cloudflare"

tls:

- hosts:

- cd.infra.plz.ac

secretName: argocd-tls

dex:

image:

repository: ghcr.plz.ac/dexidp/dex

configs:

url: "https://cd.infra.plz.ac" # Argo CD server URL

cm:

create: true # create the argocd-cm ConfigMap

annotations: {} # optional annotations

dex.config: | # dex.config content

connectors:

- type: oidc

id: authentik

name: authentik

config:

issuer: https://auth.infra.plz.ac/application/o/argocd/

clientID: xxxx

clientSecret: xxxx

insecureEnableGroups: true

scopes:

- openid

- profile

- email

rbac:

policy.csv: |

g, ArgoCD Admins, role:admin

g, ArgoCD Viewers, role:readonly这里需要注意一下在后续使用的时候需要在authenitk上面创建一个ArgoCD Admins的组,将管理argocd的用户丢到这个组里面。

ArgoCD 配置

本地安装argocd的客户端

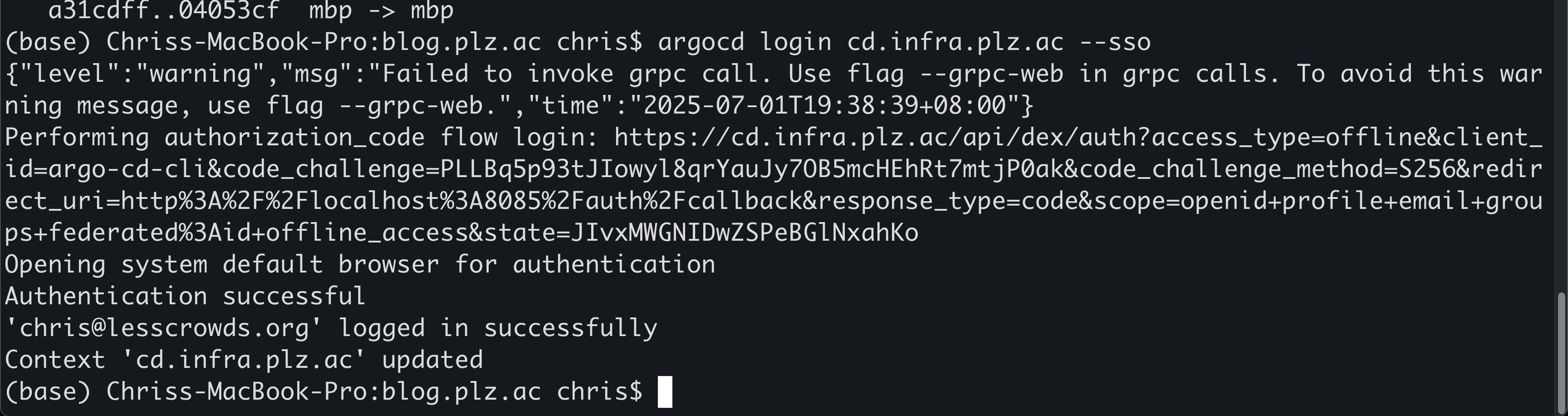

brew install argocd登录到argocd:

argocd login cd.infra.plz.ac --sso --grpc-web

这里会跳到浏览器进行认证,认证成功之后就可以关闭这个页面了。

查看当前用户信息:

argocd account get-user-info --grpc-web内容类似这样:

Logged In: true

Username: [email protected]

Issuer: https://cd.infra.plz.ac/api/dex

Groups: authentik Admins,ArgoCD Admins

Note that the Groups field must include ArgoCD Admins; otherwise managing or creating resources fails with a 403.
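This check can be automated before running any other argocd commands. A minimal sketch, assuming the output keeps the "Groups: a,b" shape of the sample above; has_group is a hypothetical helper and not part of the argocd CLI.

```shell
#!/bin/sh
# Sketch: check that the logged-in user is in a given group.
# has_group is a hypothetical helper; it parses text shaped like the sample output.

# stdin: output of `argocd account get-user-info`, $1: group name to look for.
has_group() {
  awk -v want="$1" '
    /^Groups:/ {
      sub(/^Groups:[ \t]*/, "")
      n = split($0, groups, ",")
      for (i = 1; i <= n; i++) {
        g = groups[i]
        gsub(/^[ \t]+|[ \t]+$/, "", g)   # trim spaces around each name
        if (g == want) found = 1
      }
    }
    END { exit (found ? 0 : 1) }
  '
}

# Sample text copied from this article; in practice, pipe in
# `argocd account get-user-info --grpc-web` instead.
sample='Logged In: true
Groups: authentik Admins,ArgoCD Admins'

if printf '%s\n' "$sample" | has_group "ArgoCD Admins"; then
  echo "ok: member of ArgoCD Admins"
else
  echo "missing group: expect 403 on create/manage operations"
fi
```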

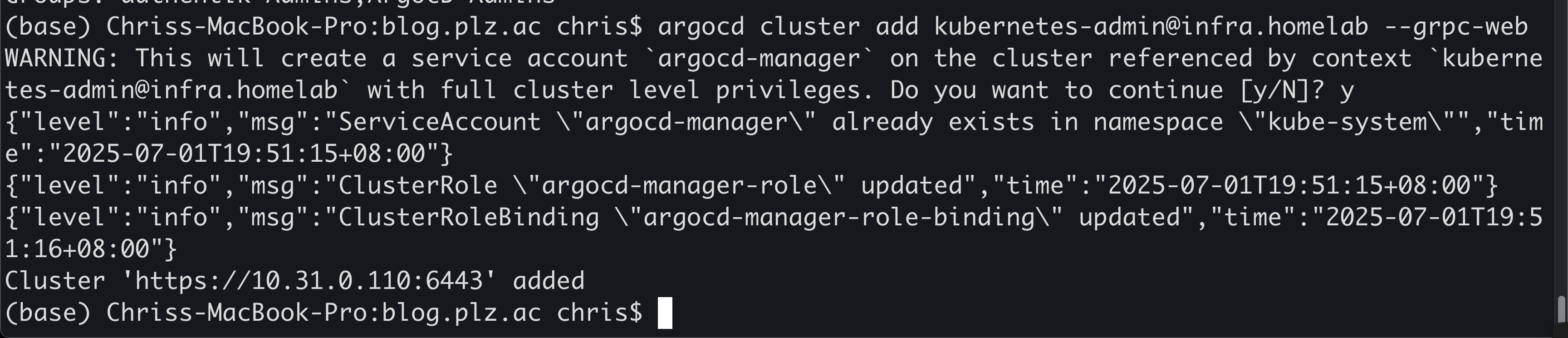

Adding a cluster to Argo CD

Next, add a K8s cluster to Argo CD.

List the current cluster contexts:

kubectl config get-contexts

Output:

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* [email protected] infra.homelab kubernetes-admin

The cluster can then be added like this:

argocd cluster add [email protected] --grpc-web

List the clusters:

argocd cluster list --grpc-web

Output:

SERVER NAME VERSION STATUS MESSAGE PROJECT

https://10.31.0.110:6443 [email protected] 1.32 Successful

https://kubernetes.default.svc in-cluster Unknown Cluster has no applications and is not being monitored.

The cluster is now added.

infra-blog-deploy

Now create a new repository that holds the deployment manifests and tracks the image version:

git init infra-blog-deploy

The directory tree:

.

├── base

│ ├── deployment.yaml

│ ├── ingress.yaml

│ ├── kustomization.yaml

│ ├── namespace.yaml

│ └── service.yaml

├── overlays

│ └── prod

│ ├── image-patch.yaml

│ └── kustomization.yaml

└── readme.md代码我放了一份镜像在github:https://github.com/slchris/infra-blog-deploy

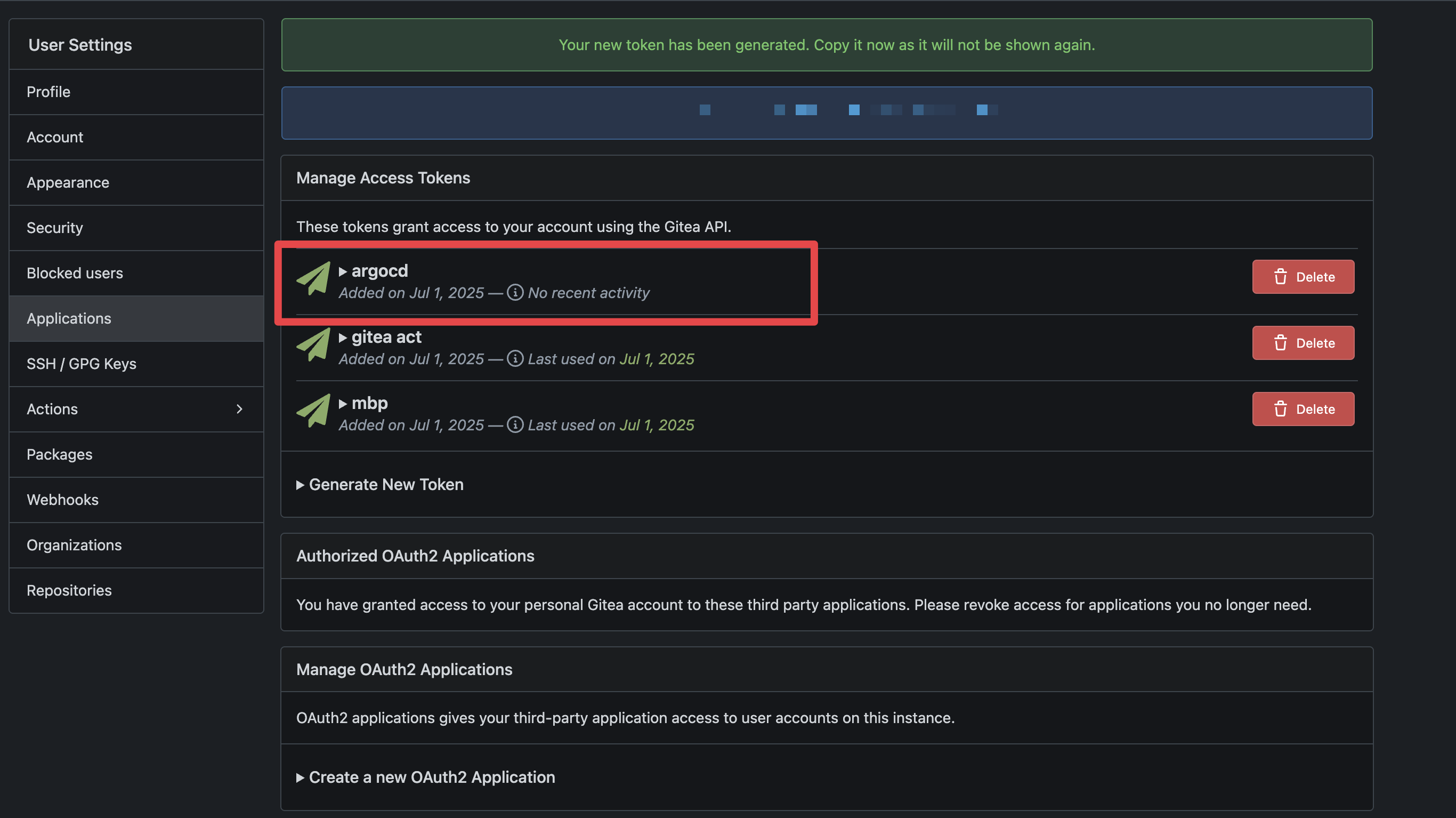

还需要单独给其生成一个token给到Argocd用于能够拉取拉取代码的权限:

添加这个token到argocd:

argocd repo add https://git.infra.plz.ac/slchris/infra-blog-deploy.git \

--username token \

--password xxxxxx \

--name infra-blog

Next, change the blog repository's workflow. Instead of updating the K8s Deployment image directly, it updates the image version in the infra-blog-deploy repository, then commits and pushes:

# .gitea/workflows/website-deploy.yml

name: Deploy Hugo Site

on:

push:

branches:

- mbp

jobs:

build:

name: Build Hugo Site

# build agent

runs-on: ubuntu-latest

steps:

- uses: https://git.infra.plz.ac/actions/checkout@releases/v4.0.0

- name: Setup Hugo

run: |

wget -c https://mirrors.infra.plz.ac/bin/hugo/v0.147.9/hugo_extended_0.147.9_linux-amd64.tar.gz

tar -xf hugo_extended_0.147.9_linux-amd64.tar.gz

mv hugo /usr/local/bin

hugo version

rm -rf hugo_extended_0.147.9_linux-amd64.tar.gz

# build site

- name: Build Hugo Site

run: |

git submodule update --init

hugo --minify

- name: Set SHORT_SHA

run: echo "SHORT_SHA=$(echo ${{ gitea.sha }} | cut -c1-8)" >> $GITHUB_ENV

- name: Login to Harbor

run: |

echo "${{ secrets.REGISTRY_PASSWORD }}" | docker login harbor.infra.plz.ac -u "${{ secrets.REGISTRY_USERNAME }}" --password-stdin

- name: Build and Push Hugo Images

run: |

docker build -t harbor.infra.plz.ac/slchris/blog:${SHORT_SHA} .

docker push harbor.infra.plz.ac/slchris/blog:${SHORT_SHA}

update:

name: Update image to infra-blog-deploy repo

runs-on: ubuntu-latest

needs: build

steps:

- name: Set SHORT_SHA

run: echo "SHORT_SHA=$(echo ${{ gitea.sha }} | cut -c1-8)" >> $GITHUB_ENV

- name: Clone GitOps repo

env:

GIT_TOKEN: ${{ secrets.GITOPS_TOKEN }}

run: |

git clone https://token:${GIT_TOKEN}@git.infra.plz.ac/slchris/infra-blog-deploy.git

cd infra-blog-deploy

git config user.name "Gitea Bot"

git config user.email "[email protected]"

- name: Update image tag in image-patch.yaml

run: |

cd infra-blog-deploy/overlays/prod

echo "🔧 Updating image to harbor.infra.plz.ac/slchris/blog:${SHORT_SHA}"

sed -i "s|image: .*|image: harbor.infra.plz.ac/slchris/blog:${SHORT_SHA}|" image-patch.yaml

- name: Commit and Push update

env:

GIT_TOKEN: ${{ secrets.GITOPS_TOKEN }}

run: |

cd infra-blog-deploy

git add overlays/prod/image-patch.yaml

git commit -m "update: hugo-web image to ${SHORT_SHA}"

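git push origin main

This uses one more secret. Create a new token in your Gitea profile with write access to the repository, and store it as a Gitea Actions secret named GITOPS_TOKEN; otherwise the push to infra-blog-deploy fails.

After a push you can see that the build is triggered and the change is pushed to the repository: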

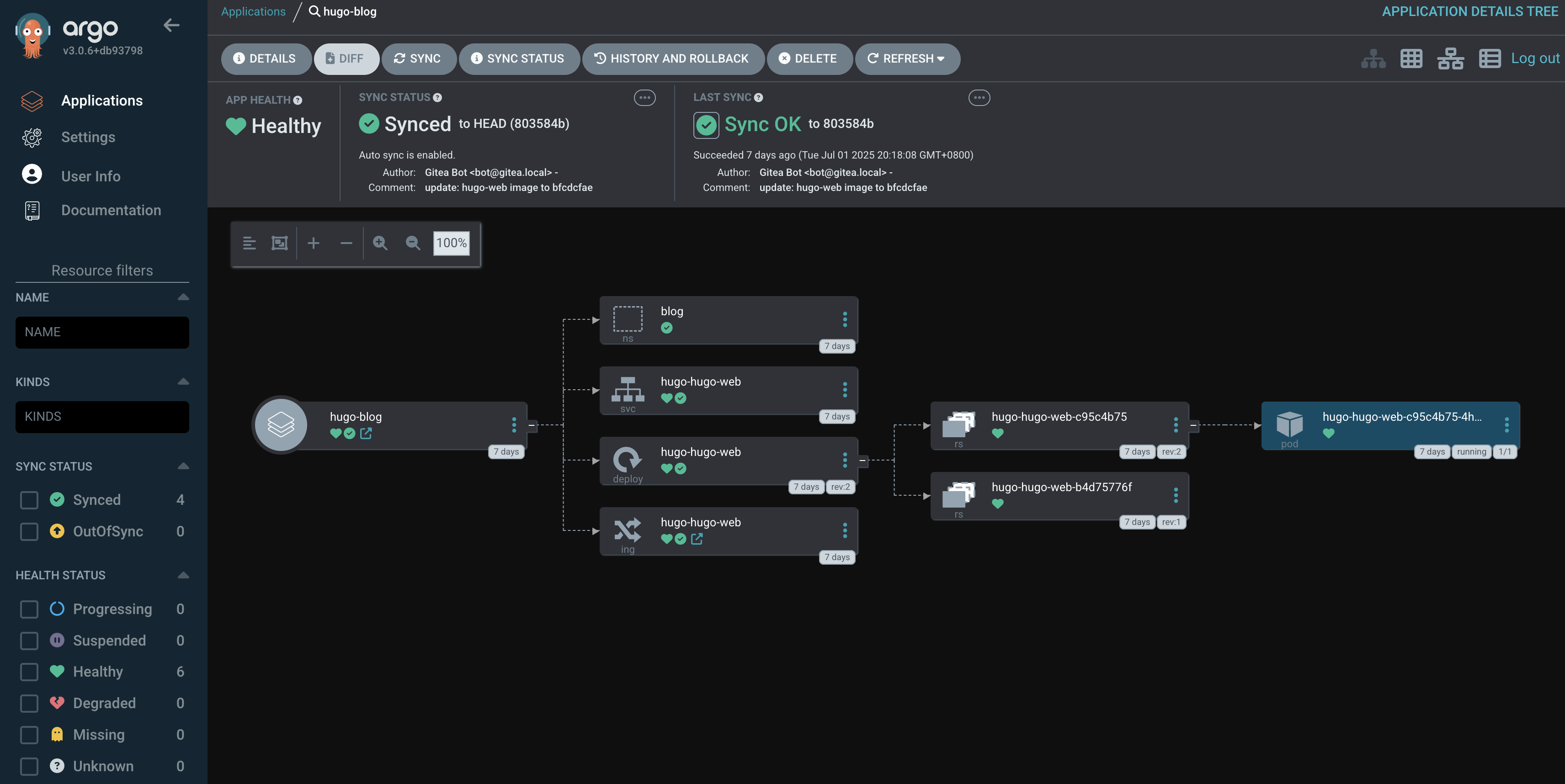
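One thing worth guarding against: if the workflow is re-run for the same commit, the sed leaves image-patch.yaml unchanged and git commit exits with an error because there is nothing to commit. A minimal sketch of an update step that reports whether anything changed; set_image and the sample file content are assumptions for illustration, not the actual image-patch.yaml.

```shell
#!/bin/sh
# Sketch: update the image line and report whether anything changed, so the
# workflow can skip `git commit` when a re-run produces no diff.
# set_image and the sample patch content are assumptions for illustration.

# $1: patch file, $2: full image reference. Returns 0 if the file changed, 1 if not.
set_image() {
  sed "s|image: .*|image: $2|" "$1" > "$1.tmp"
  if cmp -s "$1" "$1.tmp"; then
    rm -f "$1.tmp"
    return 1
  fi
  mv "$1.tmp" "$1"
}

# Example with a throwaway file shaped like a Deployment image patch.
patch=$(mktemp)
printf '      containers:\n        - name: hugo-web\n          image: harbor.infra.plz.ac/slchris/blog:bba3776f\n' > "$patch"

if set_image "$patch" "harbor.infra.plz.ac/slchris/blog:01234567"; then
  echo "changed: commit and push"      # git add / git commit / git push go here
else
  echo "unchanged: nothing to commit"
fi
cat "$patch"
rm -f "$patch"
```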

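Deploying the application with Argo CD

Now the application can be added to Argo CD so that it syncs and updates the service in the K8s cluster automatically: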

argocd app create hugo-blog \

--repo https://git.infra.plz.ac/slchris/infra-blog-deploy.git \

--path overlays/prod \

--dest-server https://10.31.0.110:6443 \

--dest-namespace blog \

--sync-policy automated \

--self-heal \

--nameprefix hugo-

Check the application status:

argocd app list

Output:

NAME CLUSTER NAMESPACE PROJECT STATUS HEALTH SYNCPOLICY CONDITIONS REPO PATH TARGET

argo-cd/hugo-blog https://10.31.0.110:6443 blog default Synced Healthy Auto <none> https://git.infra.plz.ac/slchris/infra-blog-deploy.git overlays/prod

The application can also be inspected in the web UI.
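With this setup a rollback becomes a Git operation rather than a hunt for old image IDs: reverting the commit in infra-blog-deploy lets the automated sync roll the Deployment back. As far as I know, argocd app rollback is refused while automated sync is enabled, so reverting in Git is the path that fits this configuration. A minimal sketch, assuming the unwanted change is the latest commit on main; rollback_cmds is a made-up helper that only prints the commands so they can be reviewed first.

```shell
#!/bin/sh
# Sketch: print the commands for rolling back by reverting the last commit in the
# GitOps repository. rollback_cmds is a hypothetical helper; it executes nothing.

# $1: path to a local clone of infra-blog-deploy, $2: branch that Argo CD tracks.
rollback_cmds() {
  echo "cd $1"
  echo "git revert --no-edit HEAD"
  echo "git push origin $2"
}

rollback_cmds infra-blog-deploy main
```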

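Release strategy

For now I publish to the internal network first to check the rendering and catch errors, and only push to GitHub for deployment once everything looks right.

This is done with two different git remotes; in both cases the deployed branch is main.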

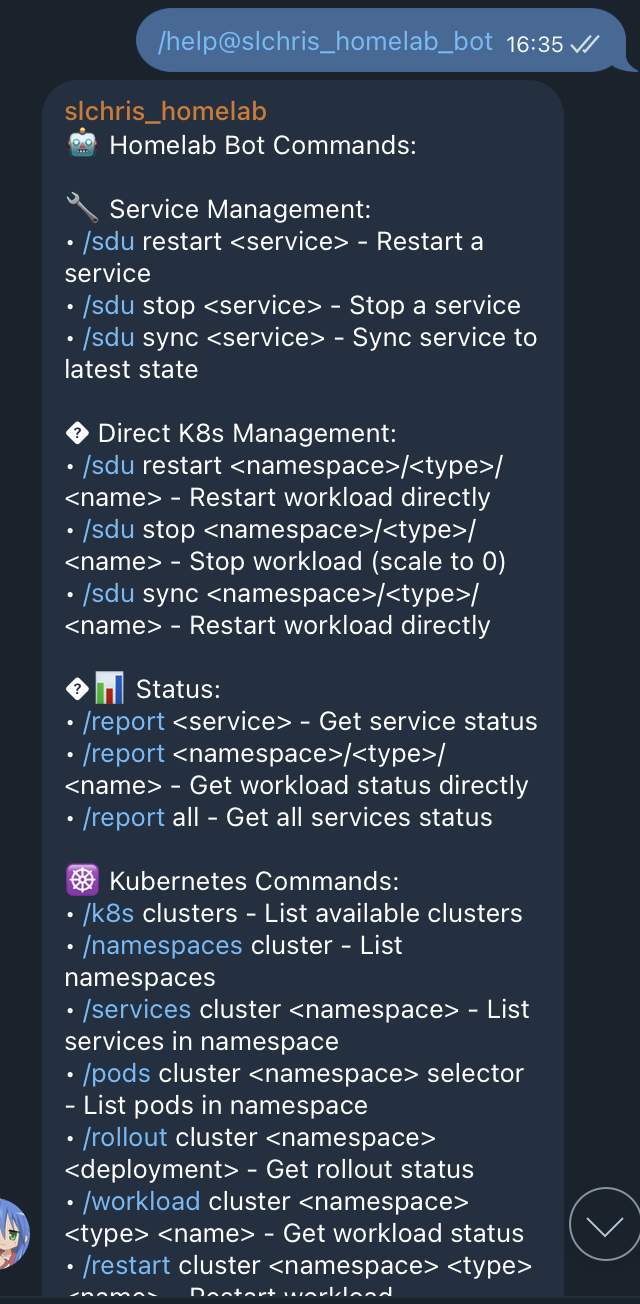

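Moving to ChatOps

In real production, deliveries are frequent, and nobody checks all of these pages on every update. Most teams learn about these events through bot or email notifications.

So notification channels need to be configured: a notification is sent whenever an image is built successfully, and the Argo CD notifications plugin sends one on each of these events:

- Application sync succeeded

- Application sync failed

- Application rolled back

- Application sync started

Notifications raise one more issue: the network may not be reachable, so requests have to be forwarded. That means deploying an HTTP proxy service that calls the relevant APIs on our behalf.

That covers notifications only. There also has to be a way to act:

- Update a service

- Roll back a service

- Restart a service

- Inspect the cause of a failure, and so on

I wrote a basic bot that implements these functions.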
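As a rough illustration of the build notification, here is a minimal sketch that composes the message a workflow step could post. The {"text": ...} payload shape, WEBHOOK_URL, and build_payload are all assumptions, since the bot's real API is not described here. The helper does no JSON escaping, so it assumes the values contain no quotes or backslashes.

```shell
#!/bin/sh
# Sketch: build a JSON message for a chat webhook after a successful image build.
# The {"text": ...} shape and WEBHOOK_URL are assumptions; adapt them to your bot.

# $1: image reference, $2: short commit SHA.
build_payload() {
  printf '{"text":"Image %s built and pushed (commit %s)"}' "$1" "$2"
}

build_payload "harbor.infra.plz.ac/slchris/blog:01234567" "01234567"
echo

# Sending it would look like this (not executed here):
# curl -fsS -X POST -H 'Content-Type: application/json' \
#      -d "$(build_payload "$IMAGE" "$SHORT_SHA")" "$WEBHOOK_URL"
```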

可观测体系的建设

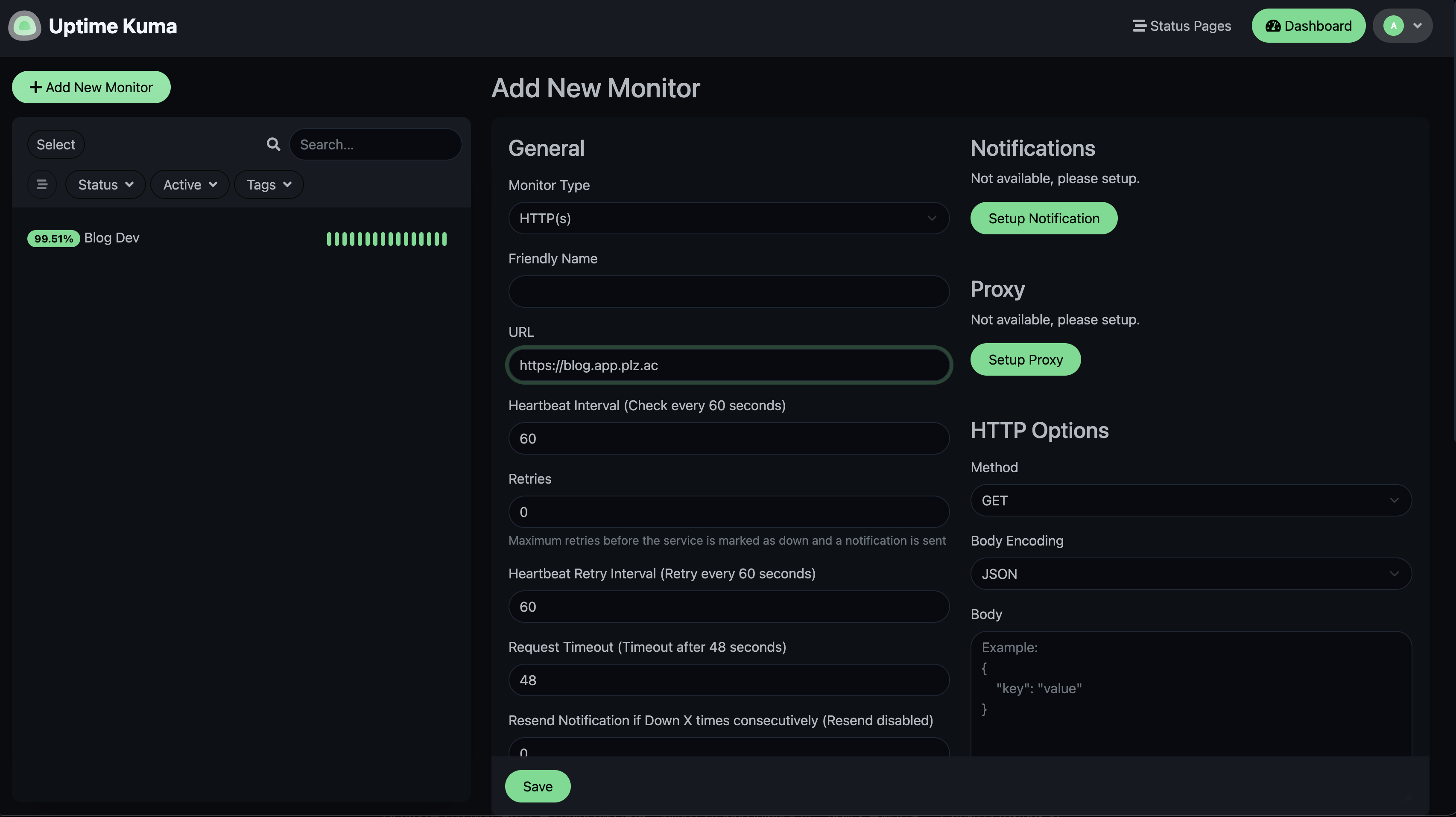

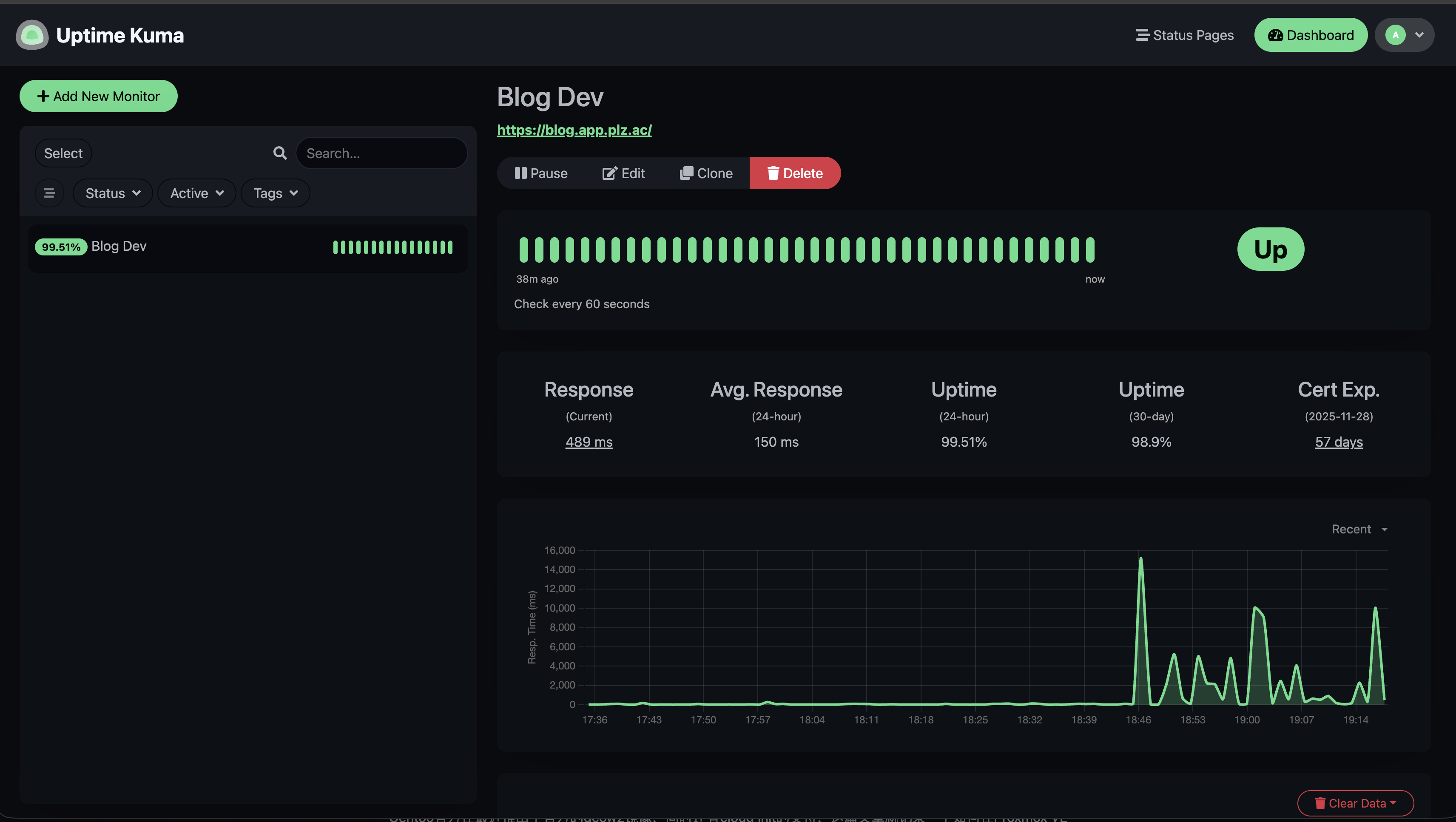

Uptime Kuma

使用uptime kuma来检测站点的存活情况,首先要下载镜像到内网的harbor

docker pull docker-proxy.plz.ac/louislam/uptime-kuma:1

docker tag docker-proxy.plz.ac/louislam/uptime-kuma:1 harbor.infra.plz.ac/louislam/uptime-kuma:1

docker push harbor.infra.plz.ac/louislam/uptime-kuma:1

The deployment manifest:

apiVersion: v1

kind: Namespace

metadata:

name: uptime-kuma

---

apiVersion: v1

kind: Service

metadata:

name: uptime-kuma

namespace: uptime-kuma

spec:

ports:

- name: web-ui

protocol: TCP

port: 3001

targetPort: 3001

clusterIP: None

selector:

app: uptime-kuma

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: uptime-kuma-pvc

namespace: uptime-kuma

spec:

accessModes:

- ReadWriteOnce

storageClassName: csi-rbd-sc

resources:

requests:

storage: 128Mi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: uptime-kuma

namespace: uptime-kuma

spec:

selector:

matchLabels:

app: uptime-kuma

replicas: 1

strategy:

type: RollingUpdate

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

template:

metadata:

labels:

app: uptime-kuma

spec:

containers:

- name: uptime-kuma

image: harbor.infra.plz.ac/louislam/uptime-kuma:1

imagePullPolicy: IfNotPresent

env:

# only need to set PUID and PGID because of NFS server

- name: PUID

value: "1000"

- name: PGID

value: "1000"

ports:

- containerPort: 3001

name: web-ui

resources:

limits:

cpu: 200m

memory: 512Mi

requests:

cpu: 50m

memory: 128Mi

livenessProbe:

tcpSocket:

port: web-ui

initialDelaySeconds: 60

periodSeconds: 10

readinessProbe:

httpGet:

scheme: HTTP

path: /

port: web-ui

initialDelaySeconds: 30

periodSeconds: 10

volumeMounts:

- name: data

mountPath: /app/data

volumes:

- name: data

persistentVolumeClaim:

claimName: uptime-kuma-pvc

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: uptime-kuma-ingress

namespace: uptime-kuma

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /

cert-manager.io/cluster-issuer: cloudflare

nginx.ingress.kubernetes.io/force-ssl-redirect: "true"

spec:

ingressClassName: nginx

rules:

- host: uptime.app.plz.ac

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: uptime-kuma

port:

number: 3001

tls:

- hosts:

- uptime.app.plz.ac

secretName: uptime-kuma-tls 应用:

kubectl apply -f uptime-kuma.yaml

添加站点:

查看对应的存活情况:

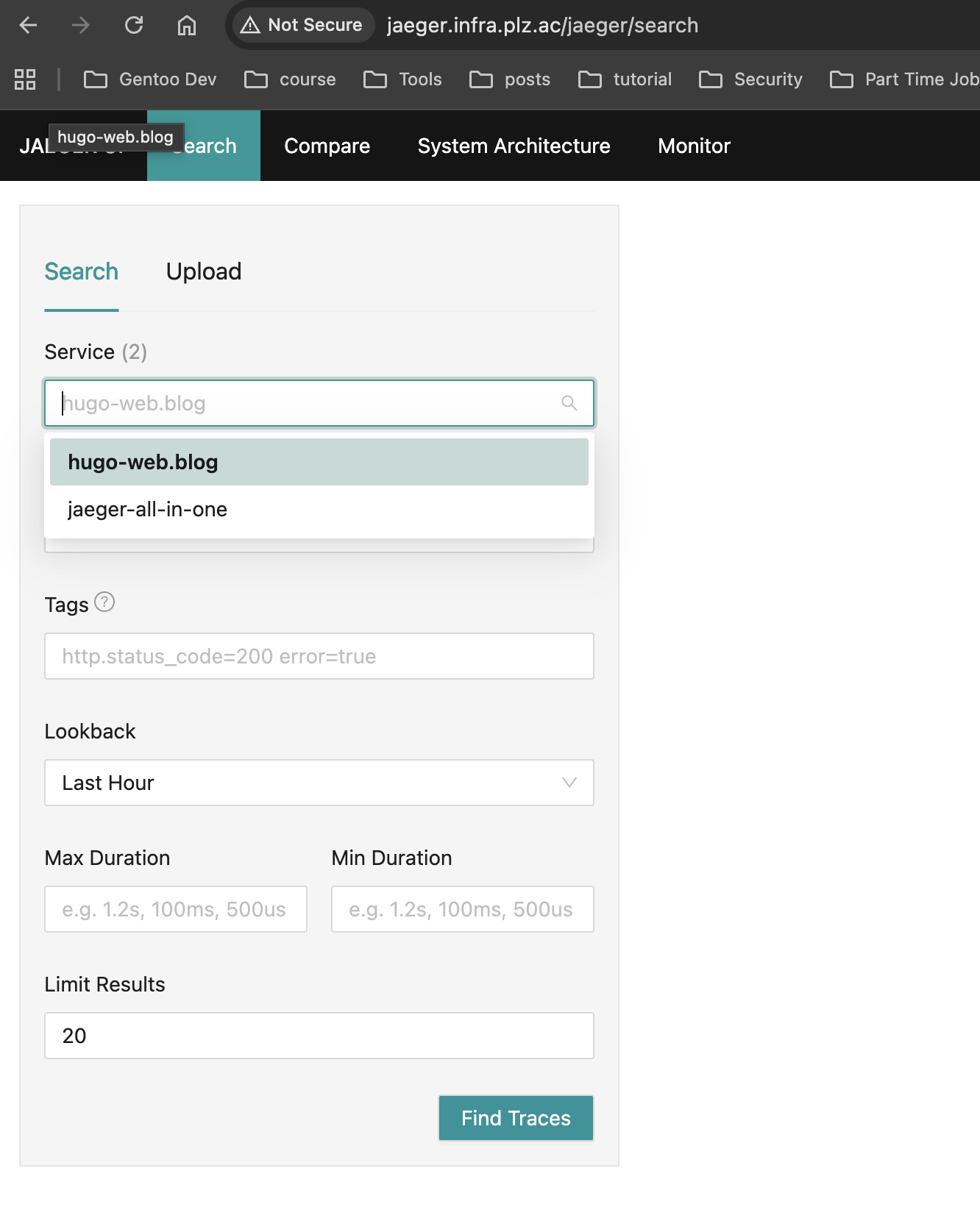

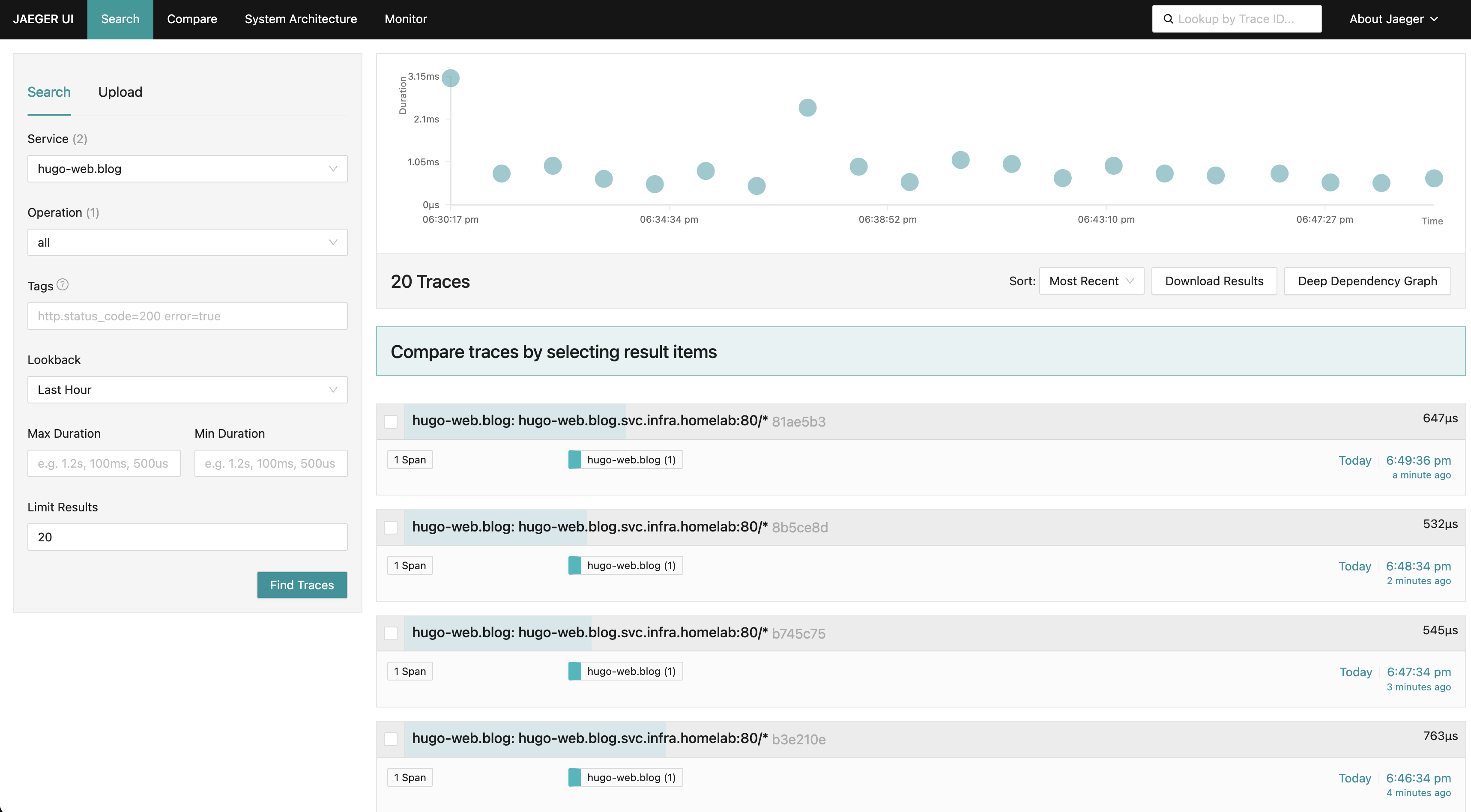

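Istio Tracing

This is only a simple static site. Istio is used here for tracing through the injected sidecar, not by routing traffic through the Istio gateway; those are two different paths.

Generate the YAML manifest:

istioctl manifest generate > istio-demo-manifest.yaml

grep 'image:' istio-demo-manifest.yaml | awk '{print $2}' | sed 's/"//g' | sort -u > image-list.txt

cat image-list.txt

Pull all the images and push them to Harbor: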

docker pull hub.infra.plz.ac/library/busybox:1.28

docker tag hub.infra.plz.ac/library/busybox:1.28 harbor.infra.plz.ac/library/busybox:1.28

docker push harbor.infra.plz.ac/library/busybox:1.28

docker pull hub.infra.plz.ac/istio/proxyv2:1.26.0

docker tag hub.infra.plz.ac/istio/proxyv2:1.26.0 harbor.infra.plz.ac/istio/proxyv2:1.26.0

docker push harbor.infra.plz.ac/istio/proxyv2:1.26.0

docker pull hub.infra.plz.ac/istio/pilot:1.26.0

docker tag hub.infra.plz.ac/istio/pilot:1.26.0 harbor.infra.plz.ac/istio/pilot:1.26.0

docker push harbor.infra.plz.ac/istio/pilot:1.26.0
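The pull/tag/push triplets can be generated from image-list.txt instead of typed by hand. A minimal sketch, assuming one image reference per line; to_harbor and mirror_cmds are hypothetical helpers that only print commands. It pulls with the names exactly as listed, so in this environment the pull source would still need to be swapped for the internal proxy.

```shell
#!/bin/sh
# Sketch: turn image-list.txt into pull/tag/push commands for Harbor.
# to_harbor and mirror_cmds are hypothetical helpers; they only print commands.

HARBOR="harbor.infra.plz.ac"

# Map an image reference to its Harbor name, dropping any source registry host.
to_harbor() {
  case "$1" in
    */*) ;;                                  # has a path, handled below
    *) echo "$HARBOR/library/$1"; return ;;  # bare name such as busybox:1.28
  esac
  first=${1%%/*}
  case "$first" in
    *.*|*:*) echo "$HARBOR/${1#*/}" ;;       # first component is a registry host
    *)       echo "$HARBOR/$1" ;;            # e.g. istio/proxyv2:1.26.0
  esac
}

# stdin: one image per line. stdout: docker commands to review or pipe to sh.
mirror_cmds() {
  while IFS= read -r image; do
    [ -n "$image" ] || continue
    target=$(to_harbor "$image")
    echo "docker pull $image"
    echo "docker tag $image $target"
    echo "docker push $target"
  done
}

printf 'busybox:1.28\ndocker.io/istio/proxyv2:1.26.0\n' | mirror_cmds
```

With the real list, mirror_cmds < image-list.txt prints the commands for review, and piping that output to sh runs them.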

Edit istio-demo-manifest.yaml so that every image points at the internal Harbor registry, then deploy:

kubectl apply -f istio-demo-manifest.yaml

Label the namespace:

kubectl label namespace blog istio-injection=enabled

Restart the Deployment:

kubectl rollout restart deployment hugo-hugo-web -n blog

Check the status:

kubectl get pods -n blog

The pod now shows 2/2 instead of the earlier 1/1:

NAME READY STATUS RESTARTS AGE

hugo-hugo-web-848967b77f-szs56 2/2 Running 0 11s

Deploy Jaeger all-in-one for testing; this setup is not recommended for production.

First push the image to the local Harbor:

docker pull hub.infra.plz.ac/jaegertracing/all-in-one:1.70.0

docker tag hub.infra.plz.ac/jaegertracing/all-in-one:1.70.0 harbor.infra.plz.ac/jaegertracing/all-in-one:1.70.0

docker push harbor.infra.plz.ac/jaegertracing/all-in-one:1.70.0

The YAML:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: jaeger
  namespace: istio-system
  labels:
    app: jaeger
spec:
  selector:
    matchLabels:
      app: jaeger
  template:
    metadata:
      labels:
        app: jaeger
        sidecar.istio.io/inject: "false"
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "14269"
    spec:
      containers:
        - name: jaeger
          image: "harbor.infra.plz.ac/jaegertracing/all-in-one:1.70.0"
          env:
            - name: BADGER_EPHEMERAL
              value: "false"
            - name: SPAN_STORAGE_TYPE
              value: "badger"
            - name: BADGER_DIRECTORY_VALUE
              value: "/badger/data"
            - name: BADGER_DIRECTORY_KEY
              value: "/badger/key"
            - name: COLLECTOR_ZIPKIN_HOST_PORT
              value: ":9411"
            - name: MEMORY_MAX_TRACES
              value: "50000"
            - name: QUERY_BASE_PATH
              value: /jaeger
          livenessProbe:
            httpGet:
              path: /
              port: 14269
          readinessProbe:
            httpGet:
              path: /
              port: 14269
          volumeMounts:
            - name: data
              mountPath: /badger
          resources:
            requests:
              cpu: 10m
      volumes:
        - name: data
          emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: tracing
  namespace: istio-system
  labels:
    app: jaeger
spec:
  type: ClusterIP
  ports:
    - name: http-query
      port: 80
      protocol: TCP
      targetPort: 16686
    # Note: Change port name if you add '--query.grpc.tls.enabled=true'
    - name: grpc-query
      port: 16685
      protocol: TCP
      targetPort: 16685
  selector:
    app: jaeger
---
# Jaeger implements the Zipkin API. To support swapping out the tracing backend, we use a Service named Zipkin.
apiVersion: v1
kind: Service
metadata:
  labels:
    name: zipkin
  name: zipkin
  namespace: istio-system
spec:
  ports:
    - port: 9411
      targetPort: 9411
      name: http-query
  selector:
    app: jaeger
---
apiVersion: v1
kind: Service
metadata:
  name: jaeger-collector
  namespace: istio-system
  labels:
    app: jaeger
spec:
  type: ClusterIP
  ports:
    - name: jaeger-collector-http
      port: 14268
      targetPort: 14268
      protocol: TCP
    - name: jaeger-collector-grpc
      port: 14250
      targetPort: 14250
      protocol: TCP
    - port: 9411
      targetPort: 9411
      name: http-zipkin
    - port: 4317
      name: grpc-otel
    - port: 4318
      name: http-otel
  selector:
    app: jaeger
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: jaeger-ingress
  namespace: istio-system
spec:
  ingressClassName: nginx
  rules:
    - host: jaeger.infra.plz.ac
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: tracing
                port:
                  number: 80

Apply it:

kubectl create -f jaeger.yaml

The Istio mesh configuration also needs a change:

kubectl edit configmap istio -n istio-system

Under defaultProviders, add jaeger as the tracing provider:

defaultProviders:
  metrics:
    - prometheus
  tracing:
    - jaeger

After saving and exiting, restart the Hugo site:

kubectl rollout restart deployment hugo-hugo-web -n blog

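Once the restart has finished, open http://jaeger.infra.plz.ac and check whether the Hugo service shows up in the service list. If the root path does not load, try /jaeger, since QUERY_BASE_PATH is set to /jaeger in the manifest above.

Then inspect the traces for the service: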

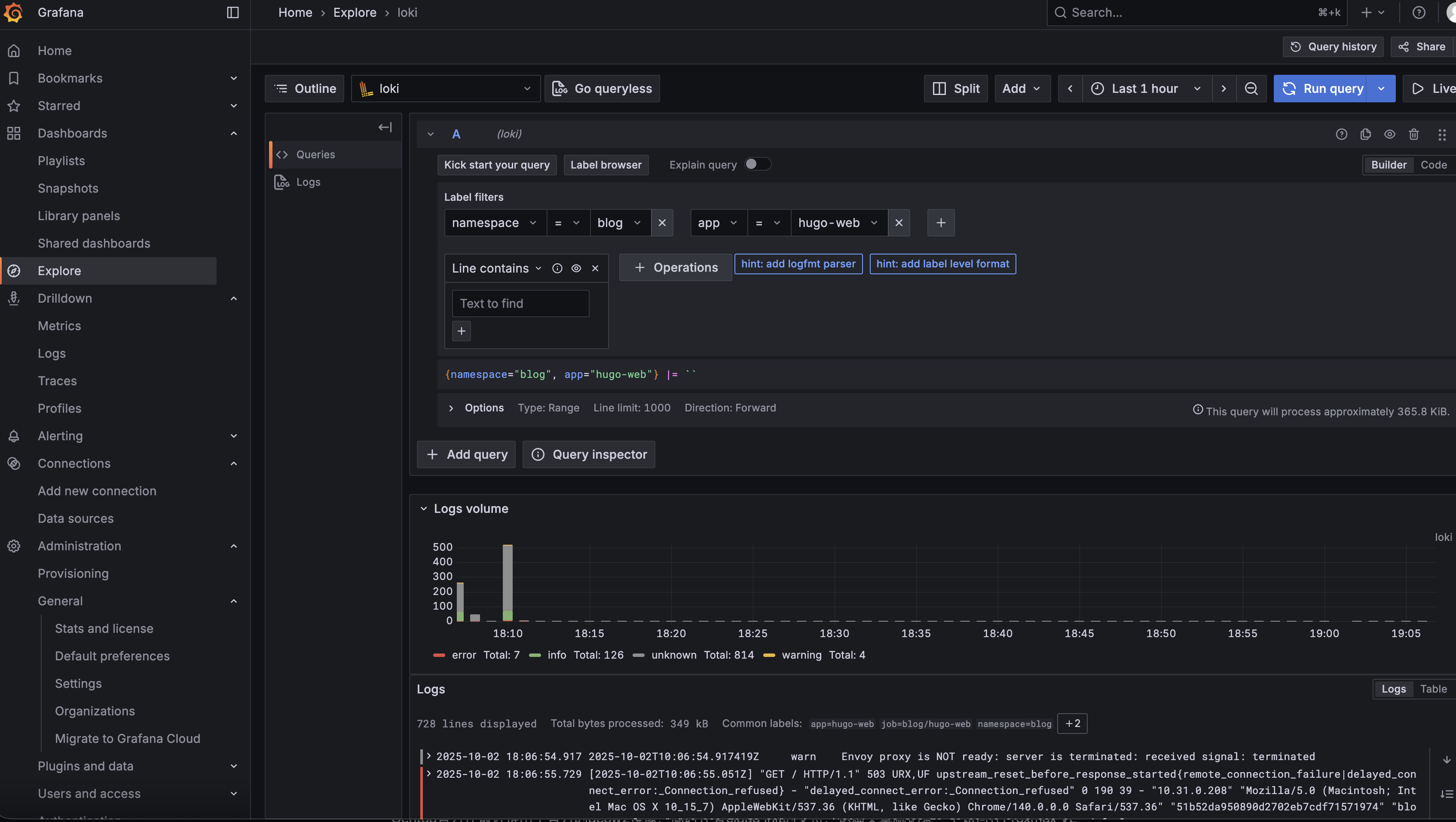

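Logging

The plan is to standardize the nginx access log into a structured format (the configuration below uses logfmt-style key=value pairs) and let Loki collect it.

Update nginx.conf as follows: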

user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log notice;
pid /run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format logfmt 'ip=$remote_addr xff=$http_x_forwarded_for '
                      'method=$request_method '
                      'uri=$request_uri '
                      'status=$status '
                      'referer=$http_referer '
                      'ua="$http_user_agent" '
                      'rt=$request_time '
                      'upstream_time=$upstream_response_time '
                      'bytes_sent=$body_bytes_sent';

    access_log /var/log/nginx/access.log logfmt;

    sendfile on;
    #tcp_nopush on;
    keepalive_timeout 65;
    server_tokens off;

    # real ip
    real_ip_header X-Forwarded-For;
    set_real_ip_from 0.0.0.0/0;
    real_ip_recursive on;

    # gzip
    gzip on;
    gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;
    gzip_min_length 1024;

    include /etc/nginx/conf.d/*.conf;
}
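To sanity-check the new format before wiring it into Loki, a single field can be pulled out of a log line with plain shell tools. The sketch below is only an illustration: the helper name logfmt_field and the sample line are made up, and it handles just the two value shapes that the log_format above produces (bare values and double-quoted values). Note that a bare value containing spaces, for example an X-Forwarded-For header listing several addresses, would not parse cleanly with this approach.

```shell
# Extract one field from a logfmt-style line.
#   $1 = key, $2 = log line
# Supports key=value and key="quoted value".
logfmt_field() {
  printf '%s\n' "$2" |
    sed -n -E "s/.*(^| )$1=(\"([^\"]*)\"|([^\" ][^ ]*)).*/\3\4/p"
}

# Sample line in the format defined above (values are invented).
line='ip=10.0.0.8 xff=- method=GET uri=/posts/ status=200 referer=- ua="Mozilla/5.0 (X11; Linux x86_64)" rt=0.000 upstream_time=- bytes_sent=5123'

logfmt_field status "$line"
logfmt_field ua "$line"
```

The same key names are what a logfmt parser on the Loki side would expose as labels, so keeping them short and stable pays off later when writing queries.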

Then update the Dockerfile so the image ships the new nginx.conf:

# hugo dockerfiles

FROM harbor.infra.plz.ac/library/nginx

LABEL maintainer="Chris Su <[email protected]>"

COPY public /usr/share/nginx/html

COPY nginx.conf /etc/nginx/nginx.conf

COPY default.conf /etc/nginx/conf.d/default.conf

Re-trigger the pipeline and the new image is built and rolled out.

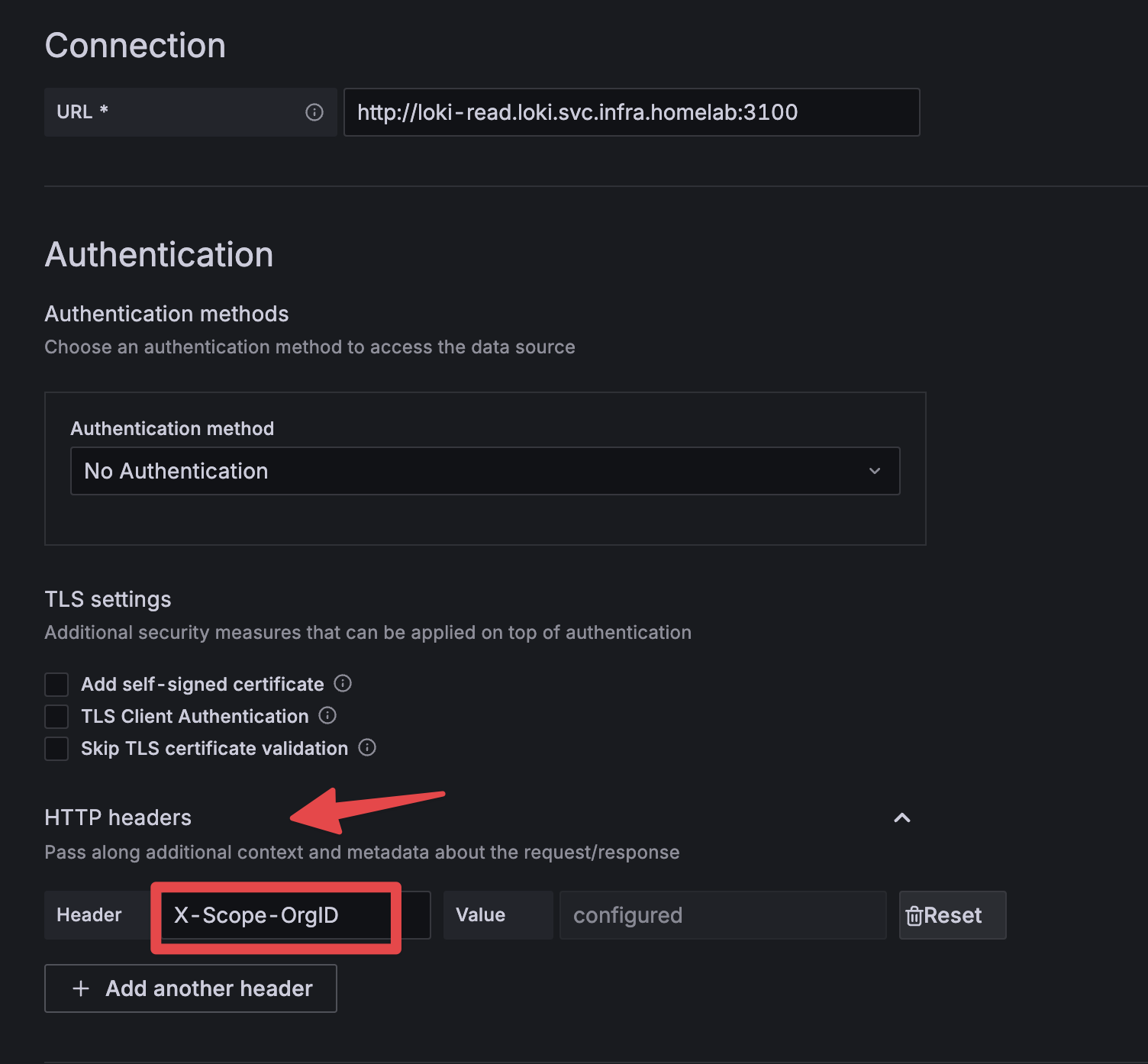

Deploying Loki

Create the bucket:

s3cmd mb s3://loki

The corresponding vaules.yaml:

global:
  clusterDomain: infra.homelab
  dnsService: "kube-dns"
  image:
    registry: docker-proxy.plz.ac

loki:
  image:
    registry: docker-proxy.plz.ac
  storage:
    type: s3
    bucketNames:
      chunks: loki
      ruler: loki
      admin: loki
    s3:
      endpoint: s3.infra.plz.ac
      region: us-east-1
      accessKeyId: QF43E82P74MW66KMK16X
      secretAccessKey: J5F9AWZ7DgtY8f9qCU8DyxVcd8sQCLHdeGWmPPCS
      s3ForcePathStyle: true
      insecure: false
  schemaConfig:
    configs:
      - from: 2024-01-01
        store: tsdb
        object_store: s3
        schema: v13
        index:
          prefix: index_
          period: 24h

gateway:
  enabled: false

lokiCanary:
  image:
    registry: docker-proxy.plz.ac

memcached:
  image:
    repository: docker-proxy.plz.ac/library/memcached

chunksCache:
  resources:
    requests:
      cpu: 1000m
      memory: 256Mi
    limits:
      cpu: 2000m
      memory: 2048Mi

memcachedExporter:
  image:
    repository: docker-proxy.plz.ac/prom/memcached-exporter

sidecar:
  image:
    repository: docker-proxy.plz.ac/kiwigrid/k8s-sidecar

Deploy:

kubectl create ns loki

helm install loki grafana/loki -f vaules.yaml -n loki

When adding Loki as a data source in Grafana, I found that an extra HTTP header is required:

X-Scope-OrgID : 1
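The header is needed because Loki is running in multi-tenant mode here, where every request must name its tenant. The same header can be sent from the command line to check that Loki answers before touching Grafana. This is a rough sketch under assumptions: the service address (loki-read in the loki namespace on port 3100) is a guess based on the chart's scalable layout with the gateway disabled, and the helper loki_get and the LOKI_* variables are invented for this example. Adjust them to whatever `kubectl get svc -n loki` shows.

```shell
# Assumed defaults; override them through the environment.
LOKI_URL="${LOKI_URL:-http://loki-read.loki.svc.infra.homelab:3100}"
LOKI_TENANT="${LOKI_TENANT:-1}"

# GET a Loki API path with the tenant header attached.
#   $1 = API path, e.g. /loki/api/v1/labels
# With LOKI_DRY_RUN=1 the curl command is printed instead of executed.
loki_get() {
  set -- curl -sS -H "X-Scope-OrgID: ${LOKI_TENANT}" "${LOKI_URL}$1"
  if [ "${LOKI_DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Example (run from a machine or pod that can reach the service):
# loki_get /loki/api/v1/labels
```

In Grafana the equivalent is a custom HTTP header on the data source with name X-Scope-OrgID and value 1.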

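The statistics showed that the logged addresses were not the real client IPs, so both nginx.conf and the ingress configuration need adjusting. On the ingress side, setting externalTrafficPolicy to Local on the ingress-nginx Service preserves the client source IP: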

kubectl patch svc ingress-nginx -n ingress-nginx -p '{"spec":{"externalTrafficPolicy":"Local"}}'

Check the access logs:

Security

Cluster security (WIP)

- OPA

- Kyverno

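- Image signing

Conclusion

Starting from a simple Hugo build, we have gradually grown a complete CI/CD setup with rollback, notifications, and monitoring. It works for a homelab, and it can also serve as a reliable reference architecture for small teams or private environments.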
